Predictive modeling is one of the most effective tools for forecasting and decision-making. I’ve spent years refining these techniques in industrial applications. In this article, you’ll learn how the main techniques work and why they’re so effective at improving process and equipment performance. Let’s walk through the core building blocks and applications that make predictive modeling so valuable for continuous improvement.

Predictive Modeling Techniques Overview

Predictive modeling is a broad term for any technique that uses historical data to predict future or unknown outcomes. These techniques have improved decision-making in virtually every industry, so learning them is a worthwhile career investment. I’ve spent years learning and implementing them, and I can tell you they’re more than just fancy math: they drive day-to-day decisions in many businesses. There are four main types of predictive modeling:

- Regression models

- Classification models

- Time series models

- Clustering models

Each modeling type is designed for a specific use case and has its own strengths and weaknesses. At a high level, a predictive model has the following components:

- Input variables (features)

- Output variable (target)

- An algorithm or a mathematical function

- Training data

- Testing data
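
To make these components concrete, here is a minimal sketch in plain Python. It assumes a hypothetical dataset of (run hours, wear) pairs, and the `train_test_split` helper is an illustrative function written for this example, not a library call.

```python
import random

def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of rows, then split it into (train, test) lists."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

# Hypothetical data: (feature, target) pairs, e.g. run hours -> wear.
data = [(hours, 0.5 * hours + 1.0) for hours in range(20)]
train, test = train_test_split(data)

features_train = [x for x, _ in train]  # input variable (feature)
targets_train = [y for _, y in train]   # output variable (target)
print(len(train), len(test))  # 15 5
```

The algorithm is fitted on the training rows only. The testing rows are held back so you can check how well it predicts data it has never seen.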

These components work together to produce a model that can accurately forecast an outcome. Predictive maintenance is one of the many applications of predictive modeling techniques, particularly in the manufacturing industry. Predictive models are used in virtually any industry, including:

- Finance (risk assessment, credit scoring)

- Healthcare (disease prediction, patient outcomes)

- Marketing (customer segmentation, campaign effectiveness)

- Manufacturing (equipment maintenance, failure prediction)

- Retail (demand forecasting, inventory management)

The most important factor in any predictive model is the quality of the data and how it is preprocessed. If you put garbage data into the model, you’ll get garbage predictions out of it. Once the data is sound, these models provide insights that you would never be able to extract through intuition alone, and that makes you a significantly better decision maker.

Linear Regression Models

Linear regression is the most common predictive model and for good reason. It’s simple and straightforward, yet very powerful. I’ve personally used it hundreds of times to understand relationships between variables.

Simple linear regression uses one independent variable and one dependent variable. Multiple linear regression, on the other hand, uses two or more independent variables.

The model assumes a linear relationship between the variables, which is a pretty big assumption. Nevertheless, it’s a good starting point most of the time.

The key assumptions of linear regression are:

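- Linearity (the target is a linear function of the predictors)

- Independence (the errors are independent of one another)

- Homoscedasticity (the errors have constant variance)

- Normality (the errors are normally distributed)

If any of these assumptions don’t hold, the coefficient estimates, standard errors, or p-values may be unreliable.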

We use metrics like R-squared, Mean Squared Error (MSE), and Root Mean Squared Error (RMSE) to evaluate linear regression models. These metrics tell us how well the model fits the data.
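
As a rough illustration, the sketch below fits a simple linear regression by ordinary least squares and computes the three metrics by hand. The data points are made up, and the function names are illustrative rather than taken from any library.

```python
import math

def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares for one independent variable."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def regression_metrics(ys, preds):
    """Return MSE, RMSE, and R-squared for a set of predictions."""
    n = len(ys)
    mean_y = sum(ys) / n
    ss_res = sum((y - p) ** 2 for y, p in zip(ys, preds))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    mse = ss_res / n
    return {"mse": mse, "rmse": math.sqrt(mse), "r2": 1 - ss_res / ss_tot}

# Made-up observations that roughly follow y = 2x.
xs = [1, 2, 3, 4, 5]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]

slope, intercept = fit_simple_linear_regression(xs, ys)
preds = [intercept + slope * x for x in xs]
metrics = regression_metrics(ys, preds)
print(round(slope, 2), round(intercept, 2), round(metrics["r2"], 3))
```

Here the slope comes out close to 2, meaning each one-unit increase in `x` goes with an increase of roughly 2 in `y`. Note that these metrics are computed on the same data used for fitting, so they describe fit rather than predictive accuracy.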

Interpreting coefficients is a critical skill. Each coefficient tells you how much the dependent variable is expected to change for a one-unit increase in that independent variable, holding the other variables constant. A p-value indicates whether the estimated relationship is statistically distinguishable from zero.

You’ll find linear regression extremely helpful for understanding relationships in your data. It’s also a great tool for making a simple initial prediction and establishing a baseline to compare more sophisticated models against.

Decision Trees and Random Forests

Decision trees are simple, intuitive models that arrive at a prediction by asking a series of questions, much like a flowchart.

At a high level, a decision tree has a root node that splits into branches based on the feature that best separates the data at that point. This process repeats until we reach leaf nodes, at which point our decision tree makes a prediction.

Splitting criteria tell the tree which questions to ask, and common splitting criteria include Gini impurity and information gain.
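
Both criteria are easy to compute by hand. The sketch below uses made-up "fail"/"ok" labels and illustrative function names to score one candidate split.

```python
import math
from collections import Counter

def gini(labels):
    """Gini impurity: 0 for a pure node, higher for mixed nodes."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def entropy(labels):
    """Shannon entropy in bits."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def information_gain(parent, left, right):
    """Entropy of the parent minus the weighted entropy of the children."""
    n = len(parent)
    weighted = (len(left) / n) * entropy(left) + (len(right) / n) * entropy(right)
    return entropy(parent) - weighted

# Made-up labels before and after one candidate split.
parent = ["fail"] * 4 + ["ok"] * 4
left = ["fail"] * 3 + ["ok"]
right = ["fail"] + ["ok"] * 3

print(gini(parent), gini(left))
print(round(information_gain(parent, left, right), 4))
```

A tree-building algorithm evaluates many candidate splits this way and keeps the one with the lowest impurity or the highest information gain.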

Advantages of decision trees include:

- Simplicity and ease of understanding

- Ability to handle both numerical and categorical data

- Low data preparation requirements

Disadvantages include:

- Tendency to produce very complex trees

- High likelihood of overfitting

- Unstable, as a small change in the data can produce an entirely different tree

Random forests solve many of these problems by training an ensemble of decision trees on random subsets of the data and features, then combining their predictions. The result is more robust and less likely to overfit.

In practice, I often find random forests to be more accurate than a single decision tree. They’re also great for feature importance, as you can easily determine which variables have the most predictive power.
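
The ensemble idea can be sketched with bagging, the bootstrap-and-vote part of a random forest. This is a simplification: it uses one-split "stumps" on a single made-up feature, whereas a real random forest grows deeper trees and also randomizes which features each split may use. All names here are illustrative.

```python
import random
from collections import Counter

def fit_stump(rows):
    """Fit the best single-threshold split; rows are (x, label) with labels 0/1."""
    best = None
    for threshold in sorted({x for x, _ in rows}):
        for left_label in (0, 1):
            errors = sum(
                (left_label if x <= threshold else 1 - left_label) != y
                for x, y in rows
            )
            if best is None or errors < best[0]:
                best = (errors, threshold, left_label)
    _, threshold, left_label = best
    return lambda x: left_label if x <= threshold else 1 - left_label

def fit_forest(rows, n_trees=25, seed=0):
    """Train each stump on a bootstrap sample (drawn with replacement)."""
    rng = random.Random(seed)
    return [fit_stump([rng.choice(rows) for _ in rows]) for _ in range(n_trees)]

def predict(forest, x):
    """Majority vote across all stumps."""
    votes = Counter(tree(x) for tree in forest)
    return votes.most_common(1)[0][0]

# Made-up data: class 0 for x below 10, class 1 from 10 upward.
rows = [(x, 0) for x in range(10)] + [(x, 1) for x in range(10, 20)]
forest = fit_forest(rows)
print(predict(forest, 2), predict(forest, 17))
```

Each stump sees a slightly different sample, so individual stumps disagree near the boundary, but the vote smooths those differences out.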

Use a single decision tree when you need to explain to a non-technical audience why the model made a specific decision. A random forest trades some of that transparency for accuracy, although its feature importance scores still show which variables matter most.

Neural Networks and Deep Learning

Neural networks are loosely inspired by the human brain and are made up of interconnected nodes (or “neurons”) arranged in layers.

The basic architecture consists of an input layer, one or more hidden layers, and an output layer.

Each neuron has an activation function that processes its inputs. Common activation functions include ReLU, sigmoid, and tanh.
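
Here is a minimal sketch of a single neuron with the three activation functions mentioned above. The weights and inputs are arbitrary illustrative values.

```python
import math

def relu(z):
    """Rectified linear unit: passes positives through, clips negatives to 0."""
    return max(0.0, z)

def sigmoid(z):
    """Squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    """Squashes any real number into the range (-1, 1)."""
    return math.tanh(z)

def neuron(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)

inputs, weights, bias = [1.0, 2.0], [0.5, 0.25], -0.5
for activation in (relu, sigmoid, tanh):
    print(activation.__name__, round(neuron(inputs, weights, bias, activation), 4))
```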

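Backpropagation is the core process for training neural networks. It computes how much each connection weight contributed to the prediction error, and an optimizer such as gradient descent then adjusts the weights to reduce that error.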
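
To show the mechanics, here is a sketch of backpropagation on the smallest network I could write down: one input, one tanh hidden neuron, and one linear output, trained on a single made-up example. The network shape, learning rate, and starting weights are all assumptions chosen for illustration.

```python
import math

def forward(w1, w2, x):
    """Forward pass: hidden activation h, then output y."""
    h = math.tanh(w1 * x)
    y = w2 * h
    return h, y

def loss(w1, w2, x, target):
    """Squared-error loss for one example."""
    _, y = forward(w1, w2, x)
    return 0.5 * (y - target) ** 2

def backward(w1, w2, x, target):
    """Apply the chain rule from the loss back to each weight."""
    h, y = forward(w1, w2, x)
    d_y = y - target               # dLoss/dy
    d_w2 = d_y * h                 # dLoss/dw2
    d_h = d_y * w2                 # dLoss/dh
    d_w1 = d_h * (1 - h * h) * x   # dLoss/dw1 (tanh' = 1 - tanh^2)
    return d_w1, d_w2

w1, w2, x, target = 0.5, -0.3, 1.5, 1.0
initial_loss = loss(w1, w2, x, target)

learning_rate = 0.1
for _ in range(200):
    g1, g2 = backward(w1, w2, x, target)
    w1 -= learning_rate * g1       # gradient descent update
    w2 -= learning_rate * g2

final_loss = loss(w1, w2, x, target)
print(initial_loss > final_loss)
```

Deep learning frameworks automate the `backward` step for networks with millions of weights, but the chain-rule logic is the same.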

Deep learning refers to neural networks with many hidden layers, and these architectures are able to learn intricate data patterns.

Convolutional Neural Networks (CNNs) are fantastic for image recognition tasks, while Recurrent Neural Networks (RNNs) are great for sequential data, such as text and time series data.

Hyperparameter tuning is essential to achieve optimal performance. Key hyperparameters include the learning rate, the number of hidden layers and neurons, batch size, and regularization parameters.

You’ll find neural networks to be an extremely powerful tool for solving complex problems. They have been the driving force behind many of the recent breakthroughs in areas like computer vision and natural language processing.

Time Series Analysis and Forecasting

Time series analysis is the task of identifying patterns in data over time. This is essential if you want to forecast future trends.

Time series data has four key components:

- Trend

- Seasonality

- Cyclical patterns

- Random noise

ARIMA (AutoRegressive Integrated Moving Average) models are popular methods for time series forecasting. SARIMA is simply ARIMA with seasonal components.

The autoregressive and moving-average parts assume a stationary series, meaning its statistical properties (such as mean and variance) do not change over time. The “integrated” part of ARIMA applies differencing to get there.

Decomposition breaks a time series into its underlying components (trend, seasonality, and residual), which makes the patterns easier to identify.

Handling non-stationary data is one of the most common challenges you’ll face. Techniques such as differencing and log transforms can make a series stationary.
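
Both transforms take only a few lines. The sketch below uses two made-up series: one with a linear trend and one with 10% compound growth.

```python
import math

def difference(series, lag=1):
    """Subtract the value `lag` steps earlier from each observation."""
    return [series[i] - series[i - lag] for i in range(lag, len(series))]

# A linear trend becomes constant after one round of differencing.
trend = [10 + 3 * t for t in range(8)]
print(difference(trend))  # [3, 3, 3, 3, 3, 3, 3]

# Exponential growth becomes constant after a log transform plus differencing.
growth = [100 * 1.1 ** t for t in range(8)]
log_diff = difference([math.log(v) for v in growth])
print([round(d, 4) for d in log_diff])
```

For seasonal data, the same helper can be called with a larger lag (for example, `lag=12` on monthly data) to remove a repeating yearly pattern.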

You can apply time series analysis to sales forecasting, demand prediction, and stock price analysis, making it a very versatile method for understanding how something changes over time.

Logistic Regression for Classification

Logistic regression is one of the most common algorithms for binary classification. Despite its name, logistic regression is actually a classification algorithm, not a regression algorithm.

The model first computes a weighted sum of the input features (the log-odds, or logit). It then applies the logistic function to convert that value into a probability between 0 and 1 that the instance belongs to a given class.

Binary logistic regression involves only two classes, while multinomial (multiclass) logistic regression involves more than two classes.

The odds ratio is the most important concept for interpreting logistic regression results. It is the exponential of a coefficient, and it tells you the factor by which the odds of the outcome are multiplied for a one-unit increase in that predictor.
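
The link between the logistic function and the odds ratio is easy to verify numerically. In this sketch the intercept and coefficient are hypothetical values standing in for a fitted model.

```python
import math

def predict_proba(x, intercept, coef):
    """Logistic function applied to the linear score (the logit)."""
    logit = intercept + coef * x
    return 1.0 / (1.0 + math.exp(-logit))

def odds(p):
    """Convert a probability into odds."""
    return p / (1.0 - p)

# Hypothetical fitted parameters, chosen only for illustration.
intercept, coef = -2.0, 0.7

p_low = predict_proba(3.0, intercept, coef)
p_high = predict_proba(4.0, intercept, coef)  # one unit higher
odds_ratio = odds(p_high) / odds(p_low)

print(round(odds_ratio, 4), round(math.exp(coef), 4))
```

The two printed numbers match: raising the predictor by one unit multiplies the odds by `exp(coef)`, regardless of the starting value of `x`.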

The model has a few assumptions:

- Observations are independent.

- The relationship between the predictors and the logit is linear.

- There is no multicollinearity among the predictors.

You can run a few diagnostics to check whether these assumptions are met.

You can use L1 (Lasso) and L2 (Ridge) regularization to avoid overfitting by including a penalty in the loss function, which encourages the optimization algorithm to select simpler models.
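
As a rough sketch, the penalty is just an extra term added to whatever loss the model already minimizes. The weights, the data loss, and the strength `lam` below are made-up values, and `penalized_loss` is an illustrative helper.

```python
def penalized_loss(data_loss, weights, lam, kind="l2"):
    """Add an L1 or L2 penalty on the weights to an existing loss value."""
    if kind == "l1":
        penalty = sum(abs(w) for w in weights)   # Lasso: sum of absolute values
    elif kind == "l2":
        penalty = sum(w * w for w in weights)    # Ridge: sum of squares
    else:
        raise ValueError("kind must be 'l1' or 'l2'")
    return data_loss + lam * penalty

weights = [1.0, -2.0, 0.5]
data_loss = 0.40  # e.g. the log loss on the training data

print(round(penalized_loss(data_loss, weights, lam=0.1, kind="l1"), 3))
print(round(penalized_loss(data_loss, weights, lam=0.1, kind="l2"), 3))
```

Larger weights now cost more, so the optimizer only keeps them if they reduce the data loss enough to pay for the penalty. L1 tends to push some weights all the way to zero, while L2 shrinks them toward zero.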

You’ll use logistic regression for tasks such as spam detection, customer churn prediction, and disease identification. It is especially helpful when you need interpretable results.

Support Vector Machines (SVM)

Support Vector Machines are high-performing algorithms for both classification and regression tasks. For classification, they find the hyperplane that separates the classes with the widest possible margin.

A linear SVM works well when the data is linearly (or nearly linearly) separable. For more complex datasets, you can use kernel functions.

Kernel functions let an SVM learn a non-linear decision boundary by implicitly mapping the inputs into a higher-dimensional space. Common examples of kernels include:

- Polynomial

- Radial Basis Function (RBF)

- Sigmoid
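
Each kernel is simply a function that scores the similarity of two points. The sketch below writes out the three kernels in their usual textbook form. The default values for `degree`, `gamma`, and `coef0` are arbitrary choices for illustration.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def polynomial_kernel(a, b, degree=2, coef0=1.0):
    """(a . b + coef0) ** degree"""
    return (dot(a, b) + coef0) ** degree

def rbf_kernel(a, b, gamma=0.5):
    """exp(-gamma * squared Euclidean distance)"""
    squared_distance = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-gamma * squared_distance)

def sigmoid_kernel(a, b, gamma=0.1, coef0=0.0):
    """tanh(gamma * a . b + coef0)"""
    return math.tanh(gamma * dot(a, b) + coef0)

p, q, far = [1.0, 2.0], [3.0, 4.0], [10.0, 10.0]
print(polynomial_kernel(p, q))                   # 144.0
print(rbf_kernel(p, p))                          # 1.0
print(rbf_kernel(p, q) > rbf_kernel(p, far))     # True
```

With the RBF kernel, similarity is 1 for identical points and decays toward 0 as points move apart, which is what lets the SVM draw curved boundaries around clusters.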

The margin is a key concept in SVM. The margin is the distance between the decision boundary and the closest data points, which in SVM are called support vectors.

SVM for regression (SVR) is similar, as it tries to fit as many instances as possible within a certain margin.

You’ll likely use the SVM algorithm on high-dimensional data, as it excels in text classification, image recognition, and bioinformatics.

Model Selection and Evaluation

Selecting the right model is important in effective predictive modeling. However, it’s not just about accuracy – you also want to evaluate models on other factors such as interpretability, computational efficiency, and ease of deployment.

Cross-validation is a critical model evaluation technique. It helps you detect overfitting by repeatedly training the model on part of the data and evaluating it on the held-out remainder.

Common cross-validation techniques include:

- K-fold cross-validation

- Stratified K-fold cross-validation

- Leave-one-out cross-validation
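
The core of K-fold cross-validation is just index bookkeeping. Here is a minimal sketch with an illustrative `k_fold_indices` generator. It uses contiguous folds without shuffling, which is an assumption made to keep the example short.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for k contiguous folds."""
    # Spread any remainder across the first few folds.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        stop = start + size
        test_idx = list(range(start, stop))
        train_idx = [i for i in range(n_samples) if i < start or i >= stop]
        yield train_idx, test_idx
        start = stop

folds = list(k_fold_indices(10, 3))
for train_idx, test_idx in folds:
    print(test_idx)
```

Every sample lands in a test fold exactly once. Setting `k` equal to the number of samples gives leave-one-out cross-validation, and the stratified variant additionally keeps class proportions similar in each fold.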

The performance metrics you use depend on the type of problem:

For regression:

- Mean Squared Error (MSE)

- Root Mean Squared Error (RMSE)

- Mean Absolute Error (MAE)

- R-squared

For classification:

- Accuracy

- Precision

- Recall

- F1-score

- Area Under the ROC Curve (AUC-ROC)
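
The first four classification metrics all come from the same four counts. This sketch computes them for a made-up set of labels and predictions. AUC-ROC is left out because it needs predicted scores rather than hard labels.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 from label lists."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = len(pairs) - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }

# Made-up labels: 3 positives, 5 negatives.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]
scores = classification_metrics(y_true, y_pred)
print({name: round(value, 3) for name, value in scores.items()})
```

In this example accuracy is 0.75, while precision, recall, and F1 are all about 0.667, which shows how accuracy can look better than the model’s performance on the positive class.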

The bias-variance tradeoff is one of the most fundamental concepts in machine learning. High bias leads to underfitting, while high variance leads to overfitting.

Ensemble methods combine multiple models to improve predictive performance. The most common techniques are:

- Bagging

- Boosting

- Stacking

You’ll quickly learn that prudent model selection and evaluation is absolutely critical to building trustworthy predictive models. It’s an iterative process that requires patience and a keen eye for detail.

Feature Engineering and Selection

Feature engineering is the process of creating new features from existing data, and it’s often the key to maximizing the potential of your models.

Strategies to create new features include:

- Combining existing features

- Extracting information from text or dates

- Binning continuous variables

- Creating interaction terms
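
Here is a small sketch of three of these strategies applied to one record. The field names (`reading_date`, `temperature_c`, `load_pct`) and the bin boundaries are hypothetical, chosen only to illustrate the idea.

```python
from datetime import date

def engineer_features(row):
    """Derive date parts, a binned variable, and an interaction term."""
    reading_date = date.fromisoformat(row["reading_date"])
    temp = row["temperature_c"]
    load = row["load_pct"]
    return {
        # Extracting information from dates
        "month": reading_date.month,
        "day_of_week": reading_date.weekday(),  # Monday is 0
        # Binning a continuous variable
        "temp_bin": "low" if temp < 40 else "medium" if temp < 70 else "high",
        # Creating an interaction term
        "temp_x_load": temp * load,
    }

row = {"reading_date": "2024-03-15", "temperature_c": 72.5, "load_pct": 0.8}
features = engineer_features(row)
print(features["month"], features["day_of_week"], features["temp_bin"])  # 3 4 high
```

Where to draw the bin boundaries is a domain decision. This is the kind of choice where knowing the equipment or process matters more than knowing the algorithm.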

Dimensionality reduction techniques are helpful when working with high-dimensional datasets. Two of the most common are Principal Component Analysis (PCA) and t-SNE, although t-SNE is used mainly for visualization rather than for producing model inputs.

Feature importance and selection techniques help you determine which features to keep. Doing so can make your model more accurate and easier to interpret.

Some of the most common feature importance and selection techniques are:

- Correlation analysis

- Random forest feature importance

- Recursive feature elimination

Dealing with categorical variables is another important skill. You can use:

| Technique | Description |
|---|---|
| One-hot encoding | Create a binary column for each category. |
| Label encoding | Assign a unique integer to each category. |
| Target encoding | Replace each category with the target mean. |
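
The three encodings in the table can be sketched in plain Python as follows. The machine types and failure flags are made-up data, and the function names are illustrative.

```python
def one_hot(values):
    """One binary column per category."""
    categories = sorted(set(values))
    return [{f"is_{c}": int(v == c) for c in categories} for v in values]

def label_encode(values):
    """One integer per category, assigned in sorted order."""
    mapping = {c: i for i, c in enumerate(sorted(set(values)))}
    return [mapping[v] for v in values]

def target_encode(values, targets):
    """Replace each category with the mean target for that category."""
    sums, counts = {}, {}
    for v, t in zip(values, targets):
        sums[v] = sums.get(v, 0.0) + t
        counts[v] = counts.get(v, 0) + 1
    return [sums[v] / counts[v] for v in values]

machines = ["pump", "fan", "pump", "motor"]
failed = [1, 0, 0, 1]

print(one_hot(machines)[0])             # {'is_fan': 0, 'is_motor': 0, 'is_pump': 1}
print(label_encode(machines))           # [2, 0, 2, 1]
print(target_encode(machines, failed))  # [0.5, 0.0, 0.5, 1.0]
```

One caution on target encoding: the means should be computed from training data only. Computing them on the full dataset leaks the target into the features and inflates your evaluation scores.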

You’ll learn that much of the skill in machine learning implementation comes from effective feature engineering. It’s where your domain knowledge of the problem often has the biggest impact.

Handling Imbalanced Datasets

Imbalanced datasets are a common issue you’ll encounter when solving real-world problems. An imbalanced dataset is one where you have one class that vastly outnumbers the other classes.

Oversampling adds more samples of the minority class. Undersampling removes samples from the majority class.

SMOTE (Synthetic Minority Over-sampling Technique) is a popular oversampling technique that generates synthetic examples of the minority class. It’s a bit more advanced than just duplicating the examples of the minority class.
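
The interpolation idea behind SMOTE can be sketched in a few lines. This is a simplified version, not the full algorithm: it always interpolates toward the single nearest minority neighbor, whereas SMOTE picks at random among the k nearest neighbors. The points are made up and `smote_like` is an illustrative name.

```python
import math
import random

def smote_like(minority, n_new, seed=0):
    """Create synthetic points on the line between a sample and its nearest neighbor."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        neighbor = min(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(base, p),
        )
        u = rng.random()  # position along the segment, in [0, 1)
        synthetic.append(tuple(b + u * (n - b) for b, n in zip(base, neighbor)))
    return synthetic

# Made-up minority-class points with two features each.
minority = [(1.0, 1.0), (1.2, 0.9), (0.8, 1.1), (1.1, 1.3)]
new_points = smote_like(minority, n_new=6)
print(len(new_points))  # 6
```

Because every synthetic point lies between two real minority samples, the new data stays inside the region the minority class already occupies. Resampling should be applied to the training set only, never to the test set.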

Other resampling methods for imbalanced datasets include:

- ADASYN (Adaptive Synthetic)

- Tomek links (an undersampling method that removes borderline majority-class samples)

- Cluster-based over-sampling

Cost-sensitive learning is when you assign different misclassification costs to different classes. This encourages the model to pay more attention to the minority class.
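
One common way to set those costs is to weight each class inversely to its frequency. The sketch below applies such weights to a log loss. The labels and predicted probabilities are made up, and the helper names are illustrative.

```python
import math
from collections import Counter

def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * count) for cls, count in counts.items()}

def weighted_log_loss(labels, probs, weights):
    """Log loss where each sample counts according to its class weight."""
    total, weight_sum = 0.0, 0.0
    for y, p in zip(labels, probs):
        w = weights[y]
        total += -w * (math.log(p) if y == 1 else math.log(1.0 - p))
        weight_sum += w
    return total / weight_sum

labels = [0] * 9 + [1]   # one rare positive among ten samples
probs = [0.1] * 10       # a model that always predicts "probably negative"

weights = balanced_class_weights(labels)
plain = weighted_log_loss(labels, probs, {0: 1.0, 1: 1.0})
weighted = weighted_log_loss(labels, probs, weights)
print(weights[1], round(plain, 3), round(weighted, 3))
```

With equal weights, the model that ignores the rare class looks acceptable. With balanced weights, its loss rises sharply, so training is pushed toward getting the minority class right.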

Accuracy alone is misleading on imbalanced data. More informative metrics include:

- Balanced accuracy

- F1-score

- Precision-Recall curve

- ROC AUC

Addressing imbalanced datasets properly is a key step in many real-world use cases. It’s particularly important in fraud detection, as well as any problem related to diagnosing a rare disease or detecting a rare event.

Deployment and Monitoring of Predictive Models

Deploying a model is only the start of the process. Monitoring and maintaining the model over time is essential for long-term success.

You can deploy a model using different strategies, including:

- Batch prediction

- Real-time prediction

- Edge deployment

Real-time prediction is necessary in scenarios where you require an immediate response from the model. Batch prediction is great when latency is less of an issue.

Model versioning is the practice of managing different versions of your model. This is critical for reproducibility and the ability to roll back to a previously deployed model if necessary.

A/B testing allows you to compare the performance of two different models in a live setting. This is a powerful concept for continuous model improvement.

Monitoring model performance involves tracking certain key metrics over time. This helps you identify problems like concept drift, which occurs when the relationship between the features and the target changes over time.

More generally, model drift is when the model’s predictions gradually become less accurate over time. This can happen because of:

- A change in the distribution of your data

- A change in the relationship between your features and the target

- An error in your data collection or preprocessing steps

To guard against model drift, retrain and redeploy the model on a regular schedule, or whenever monitoring shows that performance has degraded.
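
One simple way to watch for a change in data distribution is the Population Stability Index (PSI), which compares how a feature is spread across bins in the training data and in live data. This is a sketch under assumptions: the bin edges and data are made up, and PSI is one drift check among several, not a method the steps above prescribe.

```python
import math

def population_stability_index(expected, actual, bin_edges):
    """Sum of (actual - expected) * ln(actual / expected) over bins."""
    def proportions(values):
        counts = [0] * (len(bin_edges) + 1)
        for v in values:
            # Bin index = number of (sorted) edges the value exceeds.
            counts[sum(v > edge for edge in bin_edges)] += 1
        # A small floor avoids division by zero and log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))

# Made-up feature values at training time and in production.
training = list(range(100))
live_same = list(range(100))
live_shifted = [v + 40 for v in range(100)]
edges = [25, 50, 75]

psi_same = population_stability_index(training, live_same, edges)
psi_shifted = population_stability_index(training, live_shifted, edges)
print(psi_same, round(psi_shifted, 2))
```

A PSI of zero means the two distributions match across the bins, and larger values mean a bigger shift. The threshold that should trigger retraining is something each team has to set for its own data.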

In summary, deploying and monitoring the model well is critical to realizing the full value of your predictive models. It’s an iterative process that requires ongoing vigilance.

When it comes to maintaining equipment performance, it’s important to understand the difference between predictive and preventive maintenance. Preventive maintenance is scheduled based on time or usage, while predictive maintenance uses data and analytics to predict when maintenance is needed.

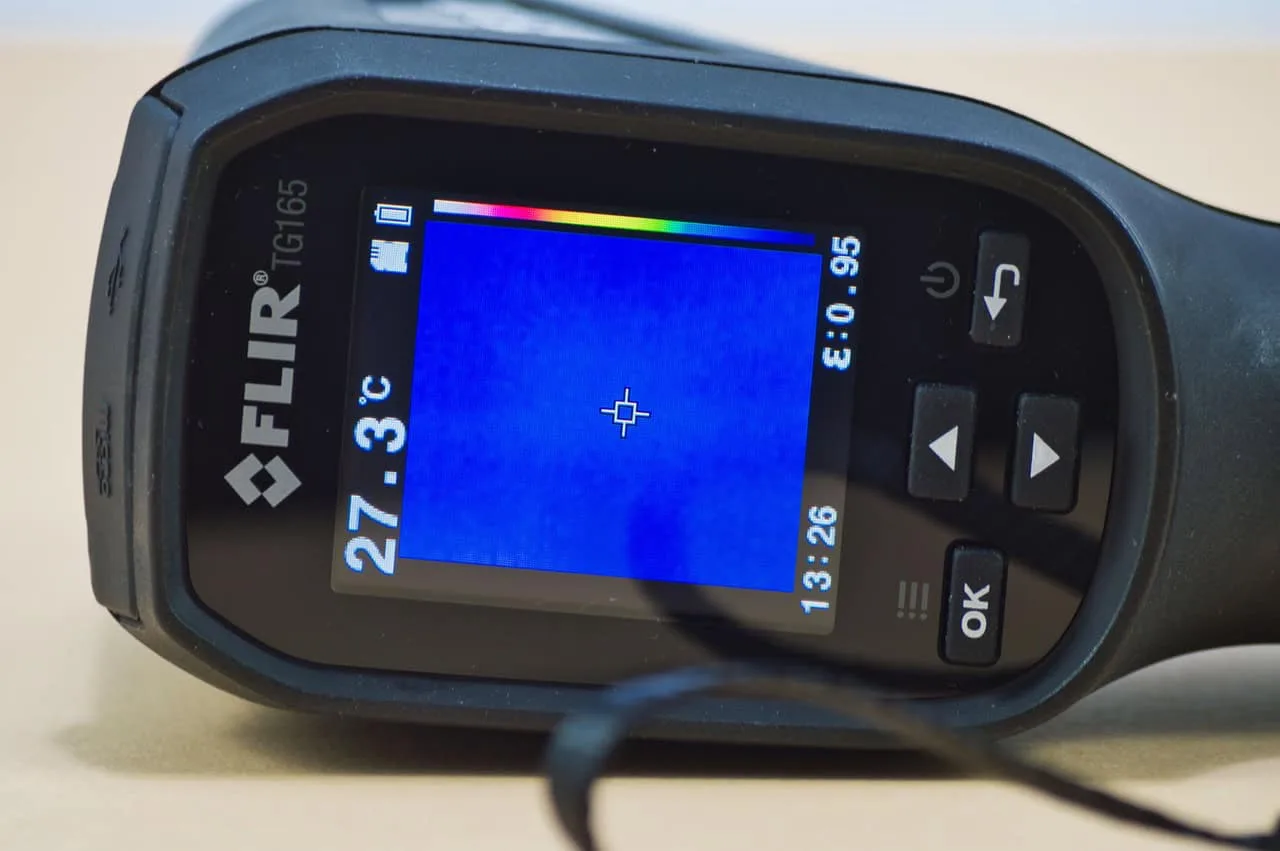

Vibration analysis is a key technique used in predictive maintenance to detect potential issues in rotating machinery. Another useful technique is thermography, which uses thermal imaging to identify hot spots that may indicate equipment problems.

In Summary

Predictive modeling algorithms are the cornerstone of data-driven decision-making. Each modeling technique, from linear regression to neural networks, has its own strengths and use cases. Data quality is still the most important factor in making accurate predictions. As an engineer, I’ve implemented these models in a variety of industries, and they really are powerful when used properly. Again, the trick is selecting the right algorithm for the specific problem and the data at hand.