Histograms are a great way to see frequency distributions, which reveal patterns in your data. I’ve leveraged histograms significantly in condition monitoring to catch early equipment problems.

You’ll also find histograms helpful for identifying trends, outliers, and general issues in your continuous improvement journey. That makes them a practical tool for finding where a process can be made more efficient.

Understanding Histograms

Histograms are excellent visualizations for data distributions, and I’ve leveraged them frequently throughout my engineering career to analyze equipment performance and diagnose issues. Essentially, histograms are just bar charts that illustrate the frequency of data points within a given range or “bin.”

The x-axis of a histogram represents the variable you are measuring (e.g., temperature, pressure, etc.), while the y-axis displays the frequency/count of data points within each bin. The bars visually represent this data distribution.

Histograms work best when you have at least 50 consecutive data points from a process, collected either over time or from a specific population. You also need a continuous variable and clearly defined bin ranges to use a histogram effectively.

The key benefit of a histogram is that it allows you to quickly identify patterns in a larger dataset. I’ve used histograms to identify key trends in equipment that weren’t immediately obvious by looking at the raw data alone.

To create a meaningful histogram, you need:

- At least 50 consecutive data points from a process

- Data collected over time or from a specific population

- A continuous variable (like temperature or weight)

- Properly defined bin ranges

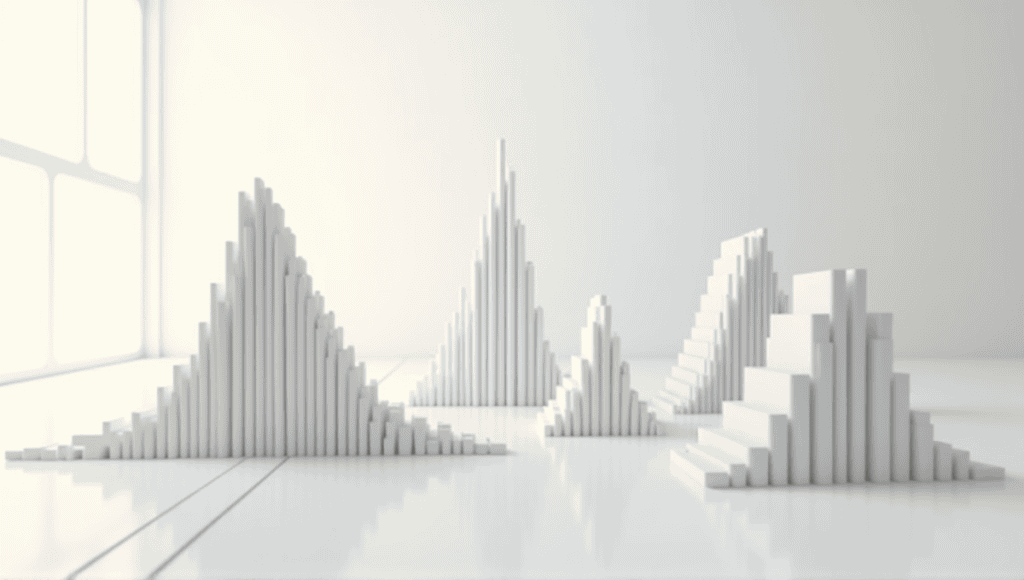

Types of Histogram Distributions

These are the most common histogram shapes I’ve seen while analyzing industrial data, and each one communicates a different story about the underlying process:

• Normal distribution: The data forms a classic bell curve. It’s symmetrical with the majority of the data clustered around the mean. I frequently see this in tightly controlled manufacturing processes.

• Skewed distribution: The peak of the data is not in the center. For a right-skewed distribution, the tail extends longer on the right side of the peak, while the left-skewed distribution has a longer tail on the left. I’ve observed a right-skewed distribution when analyzing equipment failure times.

• Bimodal distribution: The data appears to have two distinct peaks, like a two-humped camel. This is often a sign of two different processes or two different populations of data. I once came across this shape when analyzing vibration data from a machine that had two different operating modes.

• Uniform distribution: Each bar in the chart is about the same height. This is a very rare pattern to see in real-world processes, and if you observe it, it likely indicates there’s a problem.

• Exponential distribution: The frequency of the data is highest at one end and then tapers off sharply. This is often a shape you’ll see in time-between-events data, such as time between customer arrivals or time between equipment failures.

Understanding these shapes is critical because it helps you understand what the process is doing and where you should focus your improvement efforts.
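
If you want a number to back up what your eye sees, one rough check is the sample skewness (the third standardized moment). It is not mentioned above, so treat it as an added technique: values near zero suggest a symmetrical shape, positive values a right skew, and negative values a left skew. The function name and the small made-up datasets below are assumptions for illustration only.

```python
def skewness(data):
    """Moment coefficient of skewness: m3 / m2^(3/2)."""
    n = len(data)
    mean = sum(data) / n
    m2 = sum((x - mean) ** 2 for x in data) / n  # second central moment
    m3 = sum((x - mean) ** 3 for x in data) / n  # third central moment
    return m3 / m2 ** 1.5


# Made-up datasets, chosen only to show the sign of the result.
symmetric = [1, 2, 2, 3, 3, 3, 4, 4, 5]
right_skewed = [1, 1, 1, 2, 2, 3, 4, 7, 12]   # long tail to the right
left_skewed = [-x for x in right_skewed]      # mirror image

for name, values in [("symmetric", symmetric),
                     ("right-skewed", right_skewed),
                     ("left-skewed", left_skewed)]:
    print(f"{name:13s} skewness = {skewness(values):+.2f}")
```

Skewness alone won’t reveal a bimodal shape, so it complements the histogram rather than replacing it.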

Creating Histograms

Creating a histogram is a simple process:

- Collect your data.

- Decide how many bins you want.

- Calculate the bin width.

- Create a frequency distribution table.

- Draw the histogram.
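
The steps above can be sketched in a few lines of Python using only the standard library. This is a minimal sketch rather than a definitive implementation: the function name `frequency_table`, the made-up temperature readings, and the choice to use equal-width bins with the maximum value placed in the last bin are all assumptions for illustration.

```python
def frequency_table(data, num_bins):
    """Return a list of (bin_start, bin_end, count) for equal-width bins."""
    lo, hi = min(data), max(data)
    if lo == hi:
        raise ValueError("all values are identical; no range to bin")
    width = (hi - lo) / num_bins  # step 3: bin width
    counts = [0] * num_bins
    for x in data:
        # Index of the bin that x falls into. Clamp so the maximum
        # value lands in the last bin instead of one past the end.
        idx = min(int((x - lo) / width), num_bins - 1)
        counts[idx] += 1
    return [(lo + i * width, lo + (i + 1) * width, c)
            for i, c in enumerate(counts)]


# Step 1: hypothetical temperature readings (made-up values).
readings = [70, 71, 71, 72, 72, 72, 73, 73, 73, 73,
            74, 74, 74, 75, 75, 76, 77, 78, 79, 80]

# Steps 2 to 4: choose 5 bins and build the frequency table.
table = frequency_table(readings, num_bins=5)

# Step 5: draw a rough text histogram, one '#' per data point.
for start, end, count in table:
    print(f"{start:5.1f} to {end:5.1f} | {'#' * count}")
```

In practice you would hand the plotting step to one of the tools listed below, but building the table by hand once makes it clear what those tools are doing.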

Selecting the right bin size is important. Too few bins and the histogram won’t reveal key insights. Too many bins and the histogram will be too noisy. As a general rule, I shoot for 5-20 bins depending on the size of the dataset.

When choosing bin sizes, consider:

- The range of the data.

- The level of granularity you need.

- Sample size.

- What you’re trying to learn from the analysis.

Below are the tools I’ve used to create histograms over the years:

- Excel: Ideal for ad hoc analyses.

- R: Great for advanced statistical analyses.

- Python (matplotlib, seaborn): Perfect if you’re a data scientist and want a highly customized histogram.

- SPSS: User-friendly for non-coders.

Each tool has its own unique strengths, so select the one that best fits your needs and level of expertise.

Bin Selection Techniques

The right bin selection is crucial to a histogram’s accuracy. I can’t tell you how many times I’ve seen engineers misinterpret histograms because of poor binning choices.

There are a handful of rules you can use to select the number of bins or the bin width:

- Sturges’ rule: k = 1 + 3.322 * log10(n)

- Rice rule: k = 2 * n^(1/3)

- Scott’s normal reference rule: h = 3.49 * σ * n^(-1/3)

- Freedman-Diaconis rule: h = 2 * IQR * n^(-1/3)

Where k is the number of bins, n is the sample size, h is the bin width, σ is the standard deviation, and IQR is the interquartile range.
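
Here is a minimal sketch of the four rules using Python’s standard library. The function names are my own, rounding the bin count up is an assumption (the formulas rarely return a whole number), and I’m assuming the sample standard deviation for σ and the default quartile method of `statistics.quantiles` for the IQR. The data is randomly generated purely for illustration.

```python
import math
import random
import statistics


def sturges_bins(n):
    """Sturges' rule: k = 1 + 3.322 * log10(n), rounded up."""
    return math.ceil(1 + 3.322 * math.log10(n))


def rice_bins(n):
    """Rice rule: k = 2 * n^(1/3), rounded up."""
    return math.ceil(2 * n ** (1 / 3))


def scott_width(data):
    """Scott's rule: h = 3.49 * sigma * n^(-1/3)."""
    return 3.49 * statistics.stdev(data) * len(data) ** (-1 / 3)


def freedman_diaconis_width(data):
    """Freedman-Diaconis rule: h = 2 * IQR * n^(-1/3)."""
    q1, _, q3 = statistics.quantiles(data, n=4)
    return 2 * (q3 - q1) * len(data) ** (-1 / 3)


def width_to_bins(data, h):
    """Convert a bin width h into a bin count over the data range."""
    return math.ceil((max(data) - min(data)) / h)


random.seed(0)  # fixed seed so the illustration is repeatable
data = [random.gauss(50, 5) for _ in range(200)]  # made-up measurements

print("Sturges bins:", sturges_bins(len(data)))
print("Rice bins:", rice_bins(len(data)))
print("Scott bins:", width_to_bins(data, scott_width(data)))
print("Freedman-Diaconis bins:",
      width_to_bins(data, freedman_diaconis_width(data)))
```

Running several rules side by side on the same data is a quick way to see how far apart their suggestions are before you settle on a bin count.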

The bin size has a major impact on how you interpret a histogram. If it’s too big, you lose details. If it’s too small, you add noise. I usually use Sturges’ rule as a starting point and adjust it based on the data.

Choose your method by looking at:

- How the data is distributed

- The sample size

- What you’re trying to accomplish with the analysis

Remember, these are just general rules. You have to use your judgment and the specific details of the data set to get bin selection right.

Interpreting Histograms

When interpreting histograms, keep the following in mind:

- Distribution shape: Is it normal, skewed, multimodal, etc.?

- Centering: Where is the peak? Is it at a central value?

- Spread: How wide is the data set?

- Outliers: Are there any isolated bars significantly distant from the main distribution?

Look for insights into the data set. Are there any odd peaks or valleys? Does the distribution shape make sense to the process that generated the data?
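
The centering, spread, and outlier checks above have simple numeric counterparts. The sketch below is one possible approach, not the only one: the 1.5 × IQR fence for flagging outliers is a common convention that I’m assuming here, and the function name and pressure readings are made up for illustration.

```python
import statistics


def summarize(data):
    """Return center, spread, and values outside the 1.5 * IQR fences."""
    q1, median, q3 = statistics.quantiles(data, n=4)
    iqr = q3 - q1
    low_fence = q1 - 1.5 * iqr
    high_fence = q3 + 1.5 * iqr
    return {
        "mean": statistics.mean(data),      # centering
        "median": median,                   # centering, robust to outliers
        "stdev": statistics.stdev(data),    # spread
        "outliers": [x for x in data if x < low_fence or x > high_fence],
    }


# Hypothetical pressure readings with one suspicious value.
pressures = [101, 99, 100, 102, 98, 100, 101, 99, 100, 135]
summary = summarize(pressures)
print(summary["outliers"])  # [135]
```

A large gap between the mean and the median is a hint that the distribution is skewed or that an outlier is pulling the mean.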

Common mistakes analysts make when interpreting histograms are:

- Assuming all data should be normally distributed

- Neglecting to check the axis scales

- Overanalyzing small variations in the bar heights

- Failing to consider the broader context of the data

To analyze histograms effectively:

- Compare them to historical data or benchmarks from the industry

- Think about the process you know generated the data

- Look for interactions with other variables

- Use statistical tests to confirm what you see in the data

Remember, while histograms help us learn things about the data, be careful not to over-interpret them. Always crosscheck histograms with other analyses and your process knowledge.

Unusual Histogram Patterns

In my experience, I’ve seen several unique histogram patterns that are typically associated with a particular process issue:

- Plateau distribution (multimodal): A plateau distribution is several overlapping normal distributions combined in a single chart, which flattens the top. I’ve seen this when a process combines product from multiple suppliers or runs under several operating conditions.

- Edge peak distribution: An edge peak distribution is when you see peaks along one or both edges. This often happens when the histogram is constructed improperly or the data collection is truncated.

- Comb distribution: A comb distribution is when you have alternating tall and short bars. This is typically caused by data that has been rounded. I once saw this in a process with a sensor that wasn’t precisely calibrated.

- Truncated distribution: A truncated distribution occurs when the data is abruptly cut off on one end. This might indicate that a supplier is inspecting products and shipping only those within specification limits, screening out those that fall slightly outside.

- Dog food distribution: In a dog food distribution, there are virtually no data points at the average, and all of the data clusters at the specification limits. This often occurs when operators are manipulating the process just to meet specs.

Each of these patterns is a major red flag. If I ever see one of them, I immediately begin digging into the process or data collection methods.
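
To see how rounding can produce a comb pattern, here is a small, deterministic sketch. It assumes a hypothetical sensor that reports whole numbers only and a bin width (1.5) that does not line up with that rounding step; the function name and all values are made up for illustration.

```python
import math


def bin_counts(data, start, width, num_bins):
    """Count values in num_bins equal-width bins beginning at start."""
    counts = [0] * num_bins
    for x in data:
        idx = math.floor((x - start) / width)
        if 0 <= idx < num_bins:
            counts[idx] += 1
    return counts


# Each whole number from 0 to 29 occurs exactly 10 times,
# so the underlying shape is perfectly flat.
rounded = [value for value in range(30) for _ in range(10)]

# Bins of width 1.5 alternately capture two whole numbers, then one.
comb = bin_counts(rounded, start=0, width=1.5, num_bins=20)

# Bins of width 1.0 match the rounding step and show the flat shape.
flat = bin_counts(rounded, start=0, width=1.0, num_bins=30)

print(comb[:6])  # [20, 10, 20, 10, 20, 10]
print(flat[:6])  # [10, 10, 10, 10, 10, 10]
```

If re-binning at the measurement resolution makes the comb disappear, the pattern came from the data collection or the chart, not from the process.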

Applications of Histograms

Histograms are useful in various industries:

- Data analysis and exploration: An easy way to make sense of thousands or even millions of data points at once

- Manufacturing for quality control: A great technique to easily spot process variations and outliers

- Financial markets for market analysis: An effective method to visualize price distributions and trading volume

- Environmental sciences: An effective method for analyzing pollution data or the distribution of a species

- Education: A useful way to analyze the distribution of test scores in educational research

In my own career, I’ve leveraged histograms to:

- Optimize maintenance schedules for equipment

- Pinpoint sources of defects in a product

- Understand patterns in energy consumption

- Set more accurate process control limits

Their flexibility makes them one of the most valuable tools in any data-driven business.

Histograms in Financial Analysis

Histograms are essential in financial analysis. For example, in technical analysis, the MACD histogram shows the difference between the MACD line and the signal line. It’s a great tool for analyzing the strength of a trend and potential trend reversals.
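
As a rough sketch of that calculation: the MACD line is usually taken as a fast exponential moving average (EMA) minus a slow one, and the signal line as an EMA of the MACD line. The 12/26/9 periods below are the conventional defaults and are an assumption here, as are the function names and the simplification of seeding each EMA with its first value (charting packages may seed differently). The prices are made up.

```python
def ema(values, period):
    """Exponential moving average, seeded with the first value."""
    alpha = 2 / (period + 1)
    result = [values[0]]
    for v in values[1:]:
        result.append(alpha * v + (1 - alpha) * result[-1])
    return result


def macd_histogram(prices, fast=12, slow=26, signal=9):
    """Return MACD line minus signal line for each price."""
    fast_ema = ema(prices, fast)
    slow_ema = ema(prices, slow)
    macd_line = [f - s for f, s in zip(fast_ema, slow_ema)]
    signal_line = ema(macd_line, signal)
    return [m - s for m, s in zip(macd_line, signal_line)]


# Hypothetical prices in a steady uptrend.
rising = [100 + i for i in range(60)]
hist = macd_histogram(rising)
print(f"latest histogram value: {hist[-1]:.3f}")
```

Bars above zero mean the MACD line is above the signal line; shrinking bars suggest the trend is losing momentum.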

In trading, volume histograms display the volume of trades executed at different price levels. I’ve used these histograms to identify important support and resistance levels.
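
A volume histogram of this kind can be sketched by grouping trades into price buckets and summing the volume in each. The function name, the bucket size, and the trades below are all hypothetical.

```python
import math
from collections import defaultdict


def volume_by_price(trades, bucket_size):
    """Sum traded volume per price bucket of width bucket_size."""
    profile = defaultdict(int)
    for price, volume in trades:
        # Lower edge of the bucket this trade falls into.
        bucket = math.floor(price / bucket_size) * bucket_size
        profile[bucket] += volume
    return dict(sorted(profile.items()))


# Made-up (price, volume) pairs.
trades = [(100.2, 500), (100.7, 300), (101.1, 900),
          (101.4, 400), (102.9, 100)]

profile = volume_by_price(trades, bucket_size=1.0)
busiest = max(profile, key=profile.get)

for level, volume in profile.items():
    print(f"{level:7.1f} | {'#' * (volume // 100)}")
print("highest-volume level:", busiest)
```

Price levels with unusually heavy volume are the ones worth checking as candidate support or resistance.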

Price distribution histograms help you visualize how an asset’s prices have been distributed over a period. I often use these when pricing options and assessing risk.

Why are histograms useful in making better financial decisions?

- You can visualize data distributions in seconds.

- You can spot outliers and anomalies.

- You can compare multiple datasets.

- You can discover patterns you wouldn’t otherwise notice from the raw data.

While histograms aren’t a crystal ball, they’re incredibly valuable to financial analysts and traders.

Historical Context of Histograms

The term “histogram” has an interesting history. Karl Pearson coined it during lectures at University College London in 1892. However, the concept of a histogram predates the name.

In 1786, William Playfair developed the practice of using bar graphs for statistical measurements, which is essentially what we do with histograms today.

Here are the key dates in the history of the histogram:

- 1786: William Playfair invents bar graphs.

- 1833: A.M. Guerry uses shaded rectangles to show data.

- 1869: Charles Joseph Minard creates flow maps with elements of a histogram.

- 1892: Karl Pearson coins the term “histogram” during a lecture.

- 1970s: Histograms become widely popular in quality control.

The rise of computing power in the 20th century made it possible for histograms to become more popular and to be analyzed in more complex ways.

Advanced Histogram Techniques

As data analysis has advanced, so have histogram techniques. Kernel density estimation produces a smoother estimate of the distribution than a traditional histogram, at the cost of added complexity. It’s best used for continuous data whose distribution you don’t know in advance.
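
To make the idea concrete, here is a minimal sketch of a kernel density estimate with a Gaussian kernel: each data point contributes a small bell curve, and the estimate is their average. The function name, the sample values, and the hand-picked bandwidth of 0.4 are assumptions for illustration; in practice the bandwidth plays the same role as the bin width and needs the same care.

```python
import math


def gaussian_kde(data, bandwidth):
    """Return a function estimating the density at any point x."""
    n = len(data)
    norm = 1 / (n * bandwidth * math.sqrt(2 * math.pi))

    def density(x):
        # Sum one Gaussian bump per data point, centered on that point.
        return norm * sum(
            math.exp(-0.5 * ((x - xi) / bandwidth) ** 2) for xi in data
        )

    return density


# Made-up measurements.
samples = [4.8, 5.0, 5.1, 5.3, 5.9, 6.0, 6.2, 7.5]
density = gaussian_kde(samples, bandwidth=0.4)

# Evaluate on a grid from 3.0 to 9.0 and check the area is close to 1.
step = 0.05
grid = [3 + step * i for i in range(121)]
area = sum(density(x) * step for x in grid)
print(f"approximate area under the curve: {area:.3f}")
```

A smaller bandwidth gives a bumpier curve and a larger one a smoother curve, much like narrow and wide bins.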

Other more advanced techniques include:

- Cumulative frequency histograms: Display the running total of frequencies across the bins.

- 3D histograms: Show the joint distribution of two variables, with frequency on the third axis.

- Interactive/dynamic histograms: Allow viewers to interact with the plot and explore the data in real time.

- Comparative histograms: Allow you to compare more than one dataset in a single plot.

These techniques provide deeper insights, though they often require more specialized software or statistical knowledge. In my experience, they’re most helpful when analyzing complex, multivariate datasets or presenting to a technical audience.
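
Of these, the cumulative frequency histogram is the simplest to build from an ordinary one: it is just a running total of the bin counts. A minimal sketch, using hypothetical counts:

```python
from itertools import accumulate

# Hypothetical bin counts from an ordinary histogram.
counts = [3, 7, 5, 2, 3]

# Running total of the counts, bin by bin.
cumulative = list(accumulate(counts))
print(cumulative)  # [3, 10, 15, 17, 20]

# The same totals as a percentage of all data points.
cumulative_pct = [100 * c / cumulative[-1] for c in cumulative]
print(cumulative_pct)  # [15.0, 50.0, 75.0, 85.0, 100.0]
```

The percentage form makes it easy to read off questions like “what share of readings fall at or below this bin?”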

Signing Off

Histograms are excellent data analysis tools because they show patterns, insights, and potential problems in your processes. Histograms are used in everything from manufacturing to finance to analyze complex data sets.

Just be sure to use the correct bin selection and interpretation. With some practice, you’ll extract plenty of valuable insights from these visual data representations. But remember, histograms are more than just charts. They’re the secret to understanding and improving your processes.