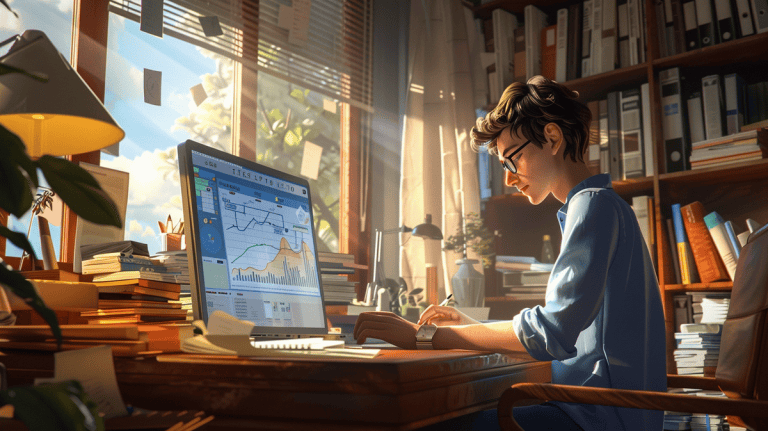

Regression analysis is a great tool for continuous improvement. I’ve personally applied regression analysis steps many times to improve processes and forecast results. So, how can you do regression analysis effectively? Here are the main steps that I’ve learned from years of doing regression analysis.

Regression Analysis Overview

Types of regression analysis include:

- Linear regression

- Multiple regression

- Logistic regression

- Polynomial regression

You can apply regression analysis across finance, marketing, healthcare and, of course, engineering. In my industry, we use it to predict when equipment will fail and optimize our maintenance schedules.

Key regression analysis concepts include:

- Dependent and independent variables

- Coefficients

- Residuals

- R-squared

- P-values

As you learn more about regression analysis, you’ll come across these terms frequently. They’re the building blocks you need to understand and interpret your regression analysis results.

Data Collection and Preparation

Data collection and preparation is the most important part of regression analysis. If you don’t have good data, you can’t build an accurate model. Many of the projects I’ve worked on failed to produce a useful model due to poor data quality.

To begin, identify the variables that are relevant to your research question. Then, gather data from a credible source and clean it. This involves removing duplicates, fixing errors, and handling missing data appropriately.

Outliers can have a big impact on the results of your model. Look at the data and determine whether there are any extreme values. Then, use your domain expertise to decide whether to remove or transform them.
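One common way to flag extreme values is the 1.5 × IQR rule. The sketch below assumes NumPy; the readings and the function name are made up for illustration, and the flagged points are candidates for review with domain knowledge, not automatic deletions.

```python
import numpy as np

def iqr_outlier_mask(values, k=1.5):
    """Return True for values more than k * IQR outside the middle 50% of the data."""
    q1, q3 = np.percentile(values, [25, 75])
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return (values < lower) | (values > upper)

# Hypothetical sensor readings with one suspicious value.
readings = np.array([10.1, 9.8, 10.3, 10.0, 9.9, 10.2, 25.0])
mask = iqr_outlier_mask(readings)
print(readings[mask])  # only the 25.0 reading is flagged
```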

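Scaling or normalizing your data is a common step, especially if the variables are on different scales. It matters most for regularized models such as Ridge and Lasso and for gradient-based fitting, where a variable’s scale affects its penalty or step size. It also makes coefficients easier to compare across variables.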

Finally, split the data into a training set and a test set. The training set is what you use to build the model and the test set is what you use to evaluate the model. A common split is 80% training and 20% testing.
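As a rough sketch of the split and scaling steps, assuming NumPy and made-up data (the helper names `train_test_split` and `standardize` are defined here and are not library functions), you might do something like this. Note that the scaling statistics come from the training set only, so no information from the test set leaks into the model.

```python
import numpy as np

def train_test_split(X, y, test_fraction=0.2, seed=0):
    """Shuffle the rows, then hold out `test_fraction` of them for testing."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(X))
    n_test = int(round(len(X) * test_fraction))
    test_idx, train_idx = order[:n_test], order[n_test:]
    return X[train_idx], X[test_idx], y[train_idx], y[test_idx]

def standardize(X_train, X_test):
    """Scale both sets using the mean and standard deviation of the training set."""
    mean = X_train.mean(axis=0)
    std = X_train.std(axis=0)
    std[std == 0] = 1.0  # leave constant columns unscaled instead of dividing by zero
    return (X_train - mean) / std, (X_test - mean) / std

# Hypothetical data: 100 rows, 3 predictors on very different scales.
rng = np.random.default_rng(42)
X = rng.normal(size=(100, 3)) * np.array([1.0, 50.0, 1000.0])
y = X @ np.array([2.0, 0.1, 0.003]) + rng.normal(size=100)

X_train, X_test, y_train, y_test = train_test_split(X, y)
X_train_s, X_test_s = standardize(X_train, X_test)
print(X_train.shape, X_test.shape)  # (80, 3) (20, 3)
```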

Exploratory Data Analysis

Exploratory Data Analysis (EDA) is the process of understanding your data before you start building a model. It’s a step I never skip.

Use scatter plots, histograms, and box plots to visualize the relationship between variables. These visualizations often reveal patterns and potential outliers.

Calculate basic statistics for each variable, such as the mean, median, and standard deviation. These statistics provide a brief overview of the data.

Check for potential correlations between variables. If independent variables are highly correlated, it can cause multicollinearity, which makes the model less stable.
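A correlation matrix is a quick first check. The sketch below assumes NumPy; the variable names and simulated values are hypothetical, and the 0.8 cutoff is only a rule of thumb to adjust for your own data.

```python
import numpy as np

# Simulated predictors: vibration is built to track temperature closely.
rng = np.random.default_rng(0)
temperature = rng.normal(70, 5, size=200)
vibration = 0.9 * temperature + rng.normal(0, 1, size=200)
load = rng.normal(50, 10, size=200)

names = ["temperature", "vibration", "load"]
X = np.column_stack([temperature, vibration, load])
corr = np.corrcoef(X, rowvar=False)  # columns are variables, rows are observations

# List predictor pairs whose absolute correlation exceeds the cutoff.
threshold = 0.8
high_pairs = [
    (names[i], names[j], corr[i, j])
    for i in range(len(names))
    for j in range(i + 1, len(names))
    if abs(corr[i, j]) > threshold
]
for a, b, r in high_pairs:
    print(f"{a} vs {b}: r = {r:.2f}")
```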

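Check the distribution of the variables. Linear regression doesn’t require the variables themselves to be normally distributed, but its standard inference assumes normally distributed residuals, and heavily skewed variables often lead to skewed residuals. If you see strong skew, you may need to transform the data or use an alternative regression technique.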

Doing EDA will both save you time and prevent you from making an error later in the analysis, so don’t rush through this step.

Choosing the Appropriate Regression Model

Selecting the right regression model is important, and the right choice depends on your research question, your data, and the assumptions you’re comfortable making.

Here are the key considerations when selecting a model:

- The dependent variable’s data type (continuous, binary, count)

- The type of relationship you expect to see between the variables (linear, non-linear)

- The number of independent variables

- Your sample size

These are some of the most common regression models:

- Simple linear regression

- Multiple linear regression

- Logistic regression

- Polynomial regression

- Ridge regression

- Lasso regression

Each has its own strengths and weaknesses. For example, linear regression is easy to use and interpret, but it assumes a linear relationship. Logistic regression is specifically designed for binary outcomes. Polynomial regression can fit a non-linear relationship and add more flexibility, though it’s more likely to overfit.
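To see the flexibility trade-off for yourself, you can fit polynomials of increasing degree and compare training and test error. This is a minimal sketch assuming NumPy and simulated data with a truly linear relationship; the degrees and noise level are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_data(n):
    """Simulate a linear relationship with noise (hypothetical data)."""
    x = rng.uniform(-1, 1, size=n)
    y = 1.0 + 2.0 * x + rng.normal(0, 0.3, size=n)
    return x, y

x_train, y_train = make_data(15)
x_test, y_test = make_data(200)

results = {}
for degree in (1, 3, 9):
    coefs = np.polyfit(x_train, y_train, deg=degree)
    train_mse = np.mean((np.polyval(coefs, x_train) - y_train) ** 2)
    test_mse = np.mean((np.polyval(coefs, x_test) - y_test) ** 2)
    results[degree] = (train_mse, test_mse)
    print(f"degree {degree}: train MSE {train_mse:.3f}, test MSE {test_mse:.3f}")
```

The training error can only go down as the degree increases, because each higher-degree model contains the lower-degree ones. The test error is what tells you whether the extra flexibility actually helped.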

Choose the model that best fits your specific situation. Don’t just go with a model because it’s common or you like using it.

Checking Regression Assumptions

The linearity assumption states that the independent variables have a linear relationship with the dependent variable. You can check this by plotting residuals against predicted values and looking for curved patterns.

Independence of observations means that each data point is independent from the others. This is usually determined based on your study design.

Homoscedasticity assumes that residuals have equal variance across all levels of the independent variables. You can check this using a scatterplot of residuals.

Another key assumption is normality of residuals. You can use a Q-Q plot to assess if residuals follow a normal distribution.

The absence of multicollinearity means that the independent variables aren’t too strongly correlated with each other. You can check this using Variance Inflation Factors (VIF).
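VIF can be computed by hand: regress each predictor on all the others and take 1 / (1 − R²). Here is a sketch assuming NumPy, with simulated predictors and a function name of my own choosing. A VIF above roughly 5 to 10 is a commonly quoted warning level, but treat that as a rule of thumb.

```python
import numpy as np

def variance_inflation_factors(X):
    """VIF for column j is 1 / (1 - R_j^2), with R_j^2 from regressing column j on the rest."""
    n, p = X.shape
    vifs = []
    for j in range(p):
        target = X[:, j]
        others = np.delete(X, j, axis=1)
        design = np.column_stack([np.ones(n), others])  # add an intercept column
        coef, *_ = np.linalg.lstsq(design, target, rcond=None)
        residuals = target - design @ coef
        ss_res = residuals @ residuals
        ss_tot = np.sum((target - target.mean()) ** 2)
        r_squared = 1.0 - ss_res / ss_tot
        vifs.append(1.0 / (1.0 - r_squared))
    return np.array(vifs)

# Hypothetical predictors: x2 is almost a copy of x1, x3 is unrelated.
rng = np.random.default_rng(0)
x1 = rng.normal(size=300)
x2 = x1 + rng.normal(0, 0.1, size=300)
x3 = rng.normal(size=300)
vifs = variance_inflation_factors(np.column_stack([x1, x2, x3]))
print(np.round(vifs, 1))
```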

How to test assumptions:

- Visual inspection of plots

- Statistical tests (e.g., Shapiro-Wilk test for normality)

- Diagnostic statistics

If the assumptions are violated, you may need to transform the data or choose a different regression approach.

Model Fitting and Parameter Estimation

After selecting a model and validating assumptions, the next step is to fit the model to your data. During this process, you determine the parameters that most accurately describe the relationship between your variables.

The most common method for linear regression is Ordinary Least Squares (OLS). OLS minimizes the sum of the squared residuals, and I’ve found it to be a reliable option for most use cases.
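For a concrete picture of OLS, here is a small sketch assuming NumPy. The five data points are invented, and `fit_ols` is a helper defined here, not a library function.

```python
import numpy as np

def fit_ols(X, y):
    """Return the least-squares coefficients, intercept first."""
    design = np.column_stack([np.ones(len(X)), X])  # prepend an intercept column
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)  # minimizes the sum of squared residuals
    return coef

x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.1])

intercept, slope = fit_ols(x.reshape(-1, 1), y)
print(f"intercept={intercept:.2f}, slope={slope:.2f}")  # intercept=0.05, slope=1.99
```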

Maximum Likelihood Estimation (MLE) is commonly used for logistic regression. It selects the parameter values that make the observed data most likely.

Gradient Descent is an optimization algorithm used in more advanced models. It iteratively adjusts the parameters in small steps to minimize the error.
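The two ideas above can be combined in one sketch: logistic regression fitted by maximum likelihood, using plain gradient steps on the log-likelihood. This assumes NumPy and simulated data; the learning rate and iteration count are arbitrary choices that happen to work for this example, and a real project would normally rely on a tested library routine instead.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fit_logistic(X, y, learning_rate=0.1, n_iter=10000):
    """Maximize the mean log-likelihood with fixed-size gradient steps."""
    design = np.column_stack([np.ones(len(X)), X])  # intercept column first
    beta = np.zeros(design.shape[1])
    for _ in range(n_iter):
        predicted = sigmoid(design @ beta)
        gradient = design.T @ (y - predicted) / len(y)  # gradient of the mean log-likelihood
        beta += learning_rate * gradient  # step uphill, toward higher likelihood
    return beta

# Hypothetical data: failure probability rises with hours of use.
rng = np.random.default_rng(1)
hours = rng.uniform(0, 10, size=500)
true_prob = sigmoid(-3.0 + 0.8 * hours)
failed = (rng.uniform(size=500) < true_prob).astype(float)

beta = fit_logistic(hours.reshape(-1, 1), failed)
print(np.round(beta, 2))  # should land near the simulated values (-3.0, 0.8)
```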

Regularization, such as Ridge or Lasso regression, is a technique to prevent overfitting. It adds a penalty to the loss function, discouraging overly complex models.
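Ridge regression has a closed-form solution, which makes the penalty easy to see. The sketch below assumes NumPy and simulated data; `fit_ridge` and the `alpha` values are illustrative. Because the penalty depends on each variable’s scale, predictors are usually standardized first (the simulated ones here already share a scale).

```python
import numpy as np

def fit_ridge(X, y, alpha=1.0):
    """Minimize ||y - Xb||^2 + alpha * ||b||^2; the intercept is not penalized."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean  # centering removes the intercept from the penalty
    n_features = X.shape[1]
    coef = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(n_features), Xc.T @ yc)
    intercept = y_mean - x_mean @ coef
    return intercept, coef

rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
y = 1.0 + X @ np.array([2.0, -1.0, 0.5]) + rng.normal(0, 0.5, size=50)

_, coef_ols = fit_ridge(X, y, alpha=0.0)     # alpha = 0 reduces to ordinary least squares
_, coef_ridge = fit_ridge(X, y, alpha=10.0)  # a larger alpha shrinks the coefficients
print(np.round(coef_ols, 3), np.round(coef_ridge, 3))
```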

Stepwise regression techniques automatically select the variables in the model. While this is handy, stepwise regression can sometimes lead to overfitting or exclude key variables.

Remember that fitting a model is an iterative process. You may need to experiment with a few different fitting strategies to find the best fit for the data.

Interpreting Regression Results

The interpretation of regression results is where you really flex your expertise. After all, you aren’t just looking at numbers – you’re understanding what those numbers mean in the context of your research.

Regression coefficients: These tell you how much the dependent variable changes when the independent variable increases by one unit. In other words, regression coefficients tell you the direction and strength of the relationships in your data.

P-values: These tell you the statistical significance of your results. A small p-value (usually < 0.05) means that a relationship this strong would be unlikely to show up by chance if there were no true relationship.

Confidence intervals: These tell you a range of reasonable values for the population parameters. Confidence intervals tell you the precision of your estimates.

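R-squared: This statistic tells you the proportion of the variance in the dependent variable explained by your model. A higher R-squared is generally better, but it never decreases when you add predictors, so a high value on its own can hide overfitting. Adjusted R-squared accounts for the number of predictors.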

F-statistic: This statistic tells you the overall significance of your model. In other words, can your model explain the dependent variable better than a model with no predictors?

How to determine the importance of individual predictors: Use t-statistics, standardized coefficients, and partial R-squared values.
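Most of the statistics above can be computed directly from an OLS fit. Here is a sketch assuming NumPy and a tiny invented data set; `ols_summary` is a helper written for this example. P-values are left out because they need the t-distribution’s CDF, which plain NumPy doesn’t provide.

```python
import numpy as np

def ols_summary(X, y):
    """Coefficients, standard errors, t-statistics, R-squared and F-statistic for OLS."""
    n, p = X.shape
    design = np.column_stack([np.ones(n), X])  # intercept column first
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ coef
    ss_res = residuals @ residuals
    ss_tot = np.sum((y - y.mean()) ** 2)
    df_resid = n - p - 1
    sigma2 = ss_res / df_resid  # estimated residual variance
    std_err = np.sqrt(np.diag(sigma2 * np.linalg.inv(design.T @ design)))
    return {
        "coef": coef,
        "std_err": std_err,
        "t": coef / std_err,
        "r_squared": 1.0 - ss_res / ss_tot,
        "f_statistic": ((ss_tot - ss_res) / p) / (ss_res / df_resid),
    }

x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
y = np.array([2.1, 3.9, 6.2, 7.8, 10.1])
summary = ols_summary(x.reshape(-1, 1), y)
print(f"R-squared = {summary['r_squared']:.4f}")  # R-squared = 0.9973
```

With a single predictor, the F-statistic equals the square of the slope’s t-statistic, which is a handy sanity check on the output.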

However, remember that just because a relationship is statistically significant doesn’t mean it’s practically significant. As you’re interpreting these statistics, use your domain knowledge to understand what the statistics are telling you.

Model Validation and Diagnostics

Residual analysis is the process of examining the differences between the observed and predicted values. By plotting the residuals, you can identify patterns, such as curvature or a widening spread, that suggest your model is missing something.

Check for influential observations and outliers that may be driving your model results. Cook’s distance is helpful for identifying influential points.
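Cook’s distance combines each point’s residual with its leverage. The sketch below assumes NumPy and a small invented data set with one corrupted point; the 4/n cutoff is a common rule of thumb, not a hard limit.

```python
import numpy as np

def cooks_distance(X, y):
    """Cook's distance for every observation of an OLS fit with an intercept."""
    n = len(y)
    design = np.column_stack([np.ones(n), X])
    k = design.shape[1]  # number of fitted parameters, including the intercept
    hat = design @ np.linalg.inv(design.T @ design) @ design.T
    leverage = np.diag(hat)
    residuals = y - hat @ y
    mse = residuals @ residuals / (n - k)
    return residuals**2 / (k * mse) * leverage / (1.0 - leverage) ** 2

# Hypothetical data: a clean line y = 2x + 1, with the last point corrupted.
x = np.arange(1.0, 11.0)
y = 2.0 * x + 1.0
y[-1] = 40.0

distances = cooks_distance(x.reshape(-1, 1), y)
flagged = np.where(distances > 4 / len(y))[0]
print(flagged)  # includes index 9, the corrupted point
```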

Cross-validation methods, such as k-fold cross-validation, will help you determine how well your model will perform on new data. They give a more robust estimate of model performance than a single train-test split.
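Here is a bare-bones version of k-fold cross-validation for a linear model, assuming NumPy and simulated data. The function name and the choice of MSE as the score are mine; libraries offer more complete implementations.

```python
import numpy as np

def k_fold_mse(X, y, k=5, seed=0):
    """Fit OLS on k-1 folds, score MSE on the held-out fold, repeat for each fold."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), k)
    scores = []
    for i in range(k):
        test_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        design_train = np.column_stack([np.ones(len(train_idx)), X[train_idx]])
        coef, *_ = np.linalg.lstsq(design_train, y[train_idx], rcond=None)
        design_test = np.column_stack([np.ones(len(test_idx)), X[test_idx]])
        predictions = design_test @ coef
        scores.append(np.mean((y[test_idx] - predictions) ** 2))
    return np.array(scores)

# Hypothetical data: y = 3 + 2x plus noise with variance 1.
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 1))
y = 3.0 + 2.0 * X[:, 0] + rng.normal(size=100)

scores = k_fold_mse(X, y, k=5)
print(f"mean MSE across folds: {scores.mean():.2f}")
```

The spread of the fold scores is useful too: large differences between folds suggest the estimate depends heavily on which rows end up in the test set.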

Use MSE, R-squared, or MAE to evaluate model fit and how well the model makes predictions. You can compare these values across different models to decide which model is best.
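These three metrics are simple to compute by hand, which helps when you want to check what a library is reporting. A small sketch, assuming NumPy and four invented observations:

```python
import numpy as np

def regression_metrics(y_true, y_pred):
    """Mean squared error, mean absolute error and R-squared for a set of predictions."""
    errors = y_true - y_pred
    ss_res = np.sum(errors**2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return {
        "mse": np.mean(errors**2),
        "mae": np.mean(np.abs(errors)),
        "r_squared": 1.0 - ss_res / ss_tot,
    }

y_true = np.array([3.0, 5.0, 7.0, 9.0])
y_pred = np.array([2.5, 5.5, 7.0, 10.0])

metrics = regression_metrics(y_true, y_pred)
print(metrics)  # mse = 0.375, mae = 0.5, r_squared = 0.925
```

MSE punishes large misses more heavily than MAE does, so comparing the two can hint at whether a few big errors dominate.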

When comparing nested models, use statistical tests, such as the likelihood ratio test or F-test, to determine whether the added complexity is worth the improvement in model performance.

Keep in mind that you’ll likely never find a perfect model. Instead, the key is finding a model that achieves the right balance of simplicity and predictive power and meets your research objectives.

Signing Off

Regression analysis is a versatile method for understanding variable relationships, and I’ve seen it used to great effect in various industries, from predicting machinery breakdowns to improving business operations.

You now know how to use regression analysis effectively. Just be sure to select the correct model, validate assumptions, and analyze the results with precision. With some experience, you’ll find key insights in your data. With careful execution, regression analysis can generate significant improvements.