Reliability analysis is key to verifying product and system performance. I’ve dedicated much of my career to helping businesses improve processes and understand when things can go wrong.

In this post, we’ll discuss how reliability analysis can be your secret weapon for predicting and preventing failures. You’ll discover the various techniques engineers and analysts use to evaluate reliability and why it should matter to your business.

Fundamentals of Reliability Analysis

Reliability analysis assesses the trustworthiness and consistency of a measurement scale, as well as the predictability or prevention of failures in a system, product, or process. I’ve applied reliability analysis principles to various industries over the years.

The MTBF (mean time between failures) is perhaps the most important reliability metric. It represents the average time that a system operates before a failure occurs. Higher MTBF values are better, as they represent higher reliability. The failure rate is another important reliability concept, representing how frequently failures occur over time.
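To make these two metrics concrete, here is a minimal Python sketch. The function names and the sample figures (5 failures over 10,000 operating hours) are made up for illustration, and the sketch assumes a repairable system whose operating hours have been logged.

```python
def mtbf(total_operating_hours: float, failure_count: int) -> float:
    """Mean time between failures: operating time divided by failures observed."""
    if failure_count <= 0:
        raise ValueError("need at least one observed failure to estimate MTBF")
    return total_operating_hours / failure_count


def failure_rate(total_operating_hours: float, failure_count: int) -> float:
    """Average number of failures per operating hour."""
    if total_operating_hours <= 0:
        raise ValueError("operating hours must be positive")
    return failure_count / total_operating_hours


# Hypothetical log: 5 failures over 10,000 operating hours.
hours, failures = 10_000.0, 5
print(mtbf(hours, failures))          # 2000.0 (hours between failures)
print(failure_rate(hours, failures))  # 0.0005 (failures per hour)
```

Note that the two numbers are reciprocals of each other here: a higher MTBF means a lower average failure rate.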

Reliability prediction models use statistical techniques to predict the future performance of a system. These models are critical for making data-driven decisions about when to perform maintenance and when to replace a system.
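As a rough illustration, one of the simplest prediction models assumes the failure rate stays constant over time, which gives the exponential reliability function R(t) = exp(-t / MTBF). That constant-rate assumption, the function name, and the 2,000-hour MTBF below are all illustrative; many real systems need richer models.

```python
from math import exp


def reliability_at(t_hours: float, mtbf_hours: float) -> float:
    """Probability of surviving to time t, ASSUMING a constant failure rate."""
    if mtbf_hours <= 0 or t_hours < 0:
        raise ValueError("MTBF must be positive and time must be non-negative")
    return exp(-t_hours / mtbf_hours)


# Hypothetical system with an MTBF of 2,000 hours.
for t in (100, 500, 2000):
    print(t, round(reliability_at(t, 2000.0), 3))
```

Under this model, the chance of running failure-free for a full MTBF is only about 37%, which is a useful reminder that MTBF is an average, not a guaranteed lifetime.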

In my opinion, reliability analysis is critical in industries like:

- Aerospace

- Manufacturing

- Healthcare

- Power generation

The data types reliability analysis uses include:

- Time-to-failure data.

- Failure count data.

- Degradation data.

- Environmental stress data.

Understanding these basics is essential for anyone interested in improving a system’s performance and reducing costly downtime.

Methods of Analysis

There are four main reliability analysis methods, each suited to assessing a different aspect of measurement consistency.

Split-half reliability involves dividing a test into two halves and then comparing the scores from each half. Use this method when working with tests containing many interchangeable items.

Inter-rater reliability compares agreement among different raters. You should use this method when the test requires human judgment. I’ve personally used this method to evaluate employee performance systems.
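Here is a small sketch of how rater agreement might be quantified. It shows raw percent agreement alongside Cohen’s kappa, a common statistic that corrects for agreement expected by chance. The choice of kappa, the function names, and the ratings are my own illustrative assumptions, not the only way to do this.

```python
from collections import Counter


def percent_agreement(rater_a, rater_b):
    """Share of items on which the two raters gave the same rating."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must rate the same non-empty set of items")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Agreement between two raters, corrected for chance agreement."""
    n = len(rater_a)
    p_observed = percent_agreement(rater_a, rater_b)
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: probability both raters pick the same category at random.
    p_chance = sum((counts_a[c] / n) * (counts_b[c] / n) for c in counts_a)
    if p_chance == 1.0:
        raise ValueError("kappa is undefined when only one category is ever used")
    return (p_observed - p_chance) / (1.0 - p_chance)


# Hypothetical performance ratings of eight employees by two managers.
manager_1 = ["meets", "exceeds", "meets", "below", "meets", "exceeds", "below", "meets"]
manager_2 = ["meets", "exceeds", "below", "below", "meets", "meets", "below", "meets"]
print(percent_agreement(manager_1, manager_2))
print(round(cohens_kappa(manager_1, manager_2), 2))
```

Kappa will usually come out lower than raw agreement, because some matches would happen even if the raters were guessing.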

Test-retest reliability means giving the exact same test to the same group twice. If the correlation between the scores is high, you can consider the test reliable over time. A correlation of 0.80 or higher is generally considered good reliability.
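A minimal sketch of the test-retest check, using a hand-written Pearson correlation so it runs on the standard library alone. The scores are invented, and the 0.80 cutoff is simply the rule of thumb mentioned above.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when all scores are identical")
    return cov / (spread_x * spread_y)


# Hypothetical scores for the same six people, tested two weeks apart.
first_sitting = [70, 82, 65, 90, 77, 58]
second_sitting = [72, 80, 68, 88, 75, 61]

r = pearson_r(first_sitting, second_sitting)
print(round(r, 3), "reliable over time" if r >= 0.80 else "needs review")
```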

Parallel forms reliability uses two different yet equivalent forms of a test and then compares the results between the two to check for consistency.

Here’s a quick comparison of these four methods:

| Method | Pros | Cons |
|---|---|---|
| Split-half | Simple to use | Assumes the two halves of the test are equally difficult |
| Inter-rater | Ideal for subjective tests | Requires you to train the raters |
| Test-retest | Assesses how consistent the test is over time | Practice effects (people will remember the answers) |
| Parallel forms | Reduces practice effects | Can be difficult to create truly equivalent forms |

The method you choose will depend on your specific needs and constraints.

Classical Test Theory in Reliability Analysis

Classical Test Theory (CTT) is the basis for many reliability calculations. It assumes measurement errors are random in nature.

In CTT, the observed score variance is the sum of the true score variance and the error variance. Mathematically, this relationship is denoted as:

Observed Score Variance = True Score Variance + Error Variance

The reliability coefficient is the central concept in CTT. It’s defined as the ratio of the true score variance to the total score variance. It’s bounded between 0 and 1, and higher values signal higher reliability.
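The ratio is easy to compute once you have the variance components. This sketch uses made-up variances, and in practice the true score variance cannot be observed directly and has to be estimated, so treat it as an illustration of the definition only.

```python
def reliability_coefficient(true_score_variance: float, error_variance: float) -> float:
    """CTT reliability: true score variance divided by observed score variance."""
    if true_score_variance < 0 or error_variance < 0:
        raise ValueError("variances cannot be negative")
    observed_variance = true_score_variance + error_variance
    if observed_variance == 0:
        raise ValueError("observed variance is zero, so reliability is undefined")
    return true_score_variance / observed_variance


# Hypothetical scale: true score variance 80, error variance 20.
print(reliability_coefficient(80.0, 20.0))  # 0.8
```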

CTT has some limitations. It assumes measurement error is the same at all levels of the score. In my experience, this isn’t always the case. Low and high scorers may have different patterns of measurement error.

While CTT isn’t a perfect theory, it’s still applicable to many problems. Because it’s simple, people from many different backgrounds can use it.

Item Response Theory and Reliability Analysis

Item Response Theory (IRT) is a more advanced approach to reliability analysis. It expands the idea of reliability from a single number to an information function.

Unlike CTT, IRT looks at item-level properties. This allows for much more accurate measurement, particularly at different ability levels.

In IRT, the information function indicates how precisely a test measures at different trait levels. This is especially helpful if you measure people with different abilities.
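To show what an information function looks like, here is a sketch based on the two-parameter logistic (2PL) model, where an item’s information at ability theta is a² · P · (1 − P). The choice of the 2PL model, the function names, and the item parameters are my assumptions for illustration; other IRT models define information differently.

```python
from math import exp


def prob_correct(theta: float, a: float, b: float) -> float:
    """2PL probability of a correct answer (a = discrimination, b = difficulty)."""
    return 1.0 / (1.0 + exp(-a * (theta - b)))


def item_information(theta: float, a: float, b: float) -> float:
    """Information one item provides at ability level theta."""
    p = prob_correct(theta, a, b)
    return a * a * p * (1.0 - p)


def total_information(theta: float, items) -> float:
    """Information for a whole test: the sum over its items."""
    return sum(item_information(theta, a, b) for a, b in items)


# Hypothetical three-item test as (discrimination, difficulty) pairs.
items = [(1.0, -1.0), (1.5, 0.0), (2.0, 1.0)]
for theta in (-2.0, 0.0, 2.0):
    print(theta, round(total_information(theta, items), 3))
```

Each item is most informative near its own difficulty level, which is why a test can measure precisely in one ability range and poorly in another.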

IRT has a few key benefits:

- More accurate estimates of item difficulty.

- Better handling of missing data.

- You can build adaptive tests.

However, IRT is more difficult to use. It requires larger sample sizes and more complex statistical models, and the organizational clients I’ve worked with often need significant training to use it effectively.

Analysis in Engineering Systems

Engineering systems require rigorous reliability analysis. Tidal energy systems are an interesting case study.

For tidal energy, the water needs to flow at an average speed of at least 2 m/s to generate power. Any flow speed less than 2 m/s or greater than 5 m/s is considered inadequate for generating power. This highlights the importance of reliable environmental data.
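The flow-speed window above translates directly into a simple data check. In this sketch, the constant names, function names, and sample readings are hypothetical; only the 2 m/s and 5 m/s limits come from the text.

```python
MIN_FLOW_M_PER_S = 2.0  # below this, flow is too slow to generate power
MAX_FLOW_M_PER_S = 5.0  # above this, flow is considered inadequate as well


def is_flow_adequate(speed_m_per_s: float) -> bool:
    """True when the flow speed falls inside the usable 2-5 m/s window."""
    return MIN_FLOW_M_PER_S <= speed_m_per_s <= MAX_FLOW_M_PER_S


def usable_fraction(speeds_m_per_s) -> float:
    """Share of flow readings that fall inside the usable window."""
    readings = list(speeds_m_per_s)
    if not readings:
        raise ValueError("need at least one flow reading")
    return sum(is_flow_adequate(s) for s in readings) / len(readings)


# Hypothetical flow readings in m/s from a candidate site.
readings = [1.5, 2.0, 3.2, 5.0, 5.4]
print(usable_fraction(readings))  # 0.6
```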

Key factors affecting reliability in engineering systems are:

- Material property data

- Environmental data

- Maintenance data

- System complexity data

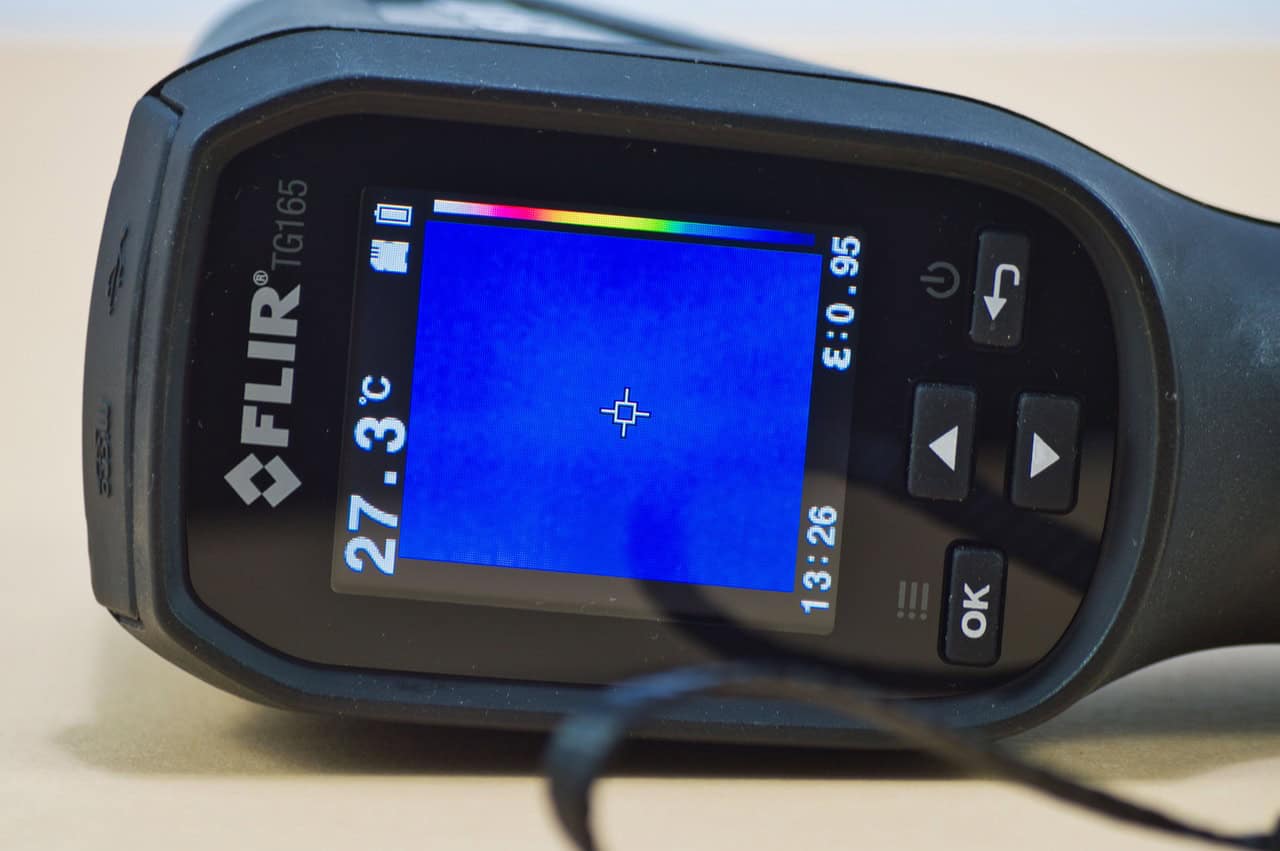

Common reliability analysis techniques in engineering are:

- Fault Tree Analysis

- Failure Mode and Effects Analysis (FMEA)

- Reliability Block Diagrams

- Monte Carlo Simulation
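As one example from this list, a Reliability Block Diagram reduces to two basic calculations: components in series all have to work, while components in parallel only need one survivor. The sketch below assumes components fail independently of each other, and the component reliabilities are invented.

```python
from math import prod


def series_reliability(reliabilities) -> float:
    """All blocks must work: multiply the individual reliabilities."""
    return prod(reliabilities)


def parallel_reliability(reliabilities) -> float:
    """At least one block must work: 1 minus the chance that every block fails."""
    return 1.0 - prod(1.0 - r for r in reliabilities)


# Hypothetical system: two redundant pumps (0.90 each) feeding one controller (0.99).
pumps = parallel_reliability([0.90, 0.90])
system = series_reliability([pumps, 0.99])
print(round(pumps, 4), round(system, 4))
```

The redundant pair ends up far more reliable than either pump alone, which is the usual argument for adding fault tolerance at a weak point.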

Applying Lean Innovation principles can make your reliability analysis more effective. If a step doesn’t add value, or adds needless complexity to the system, eliminate it.

System Effectiveness and Reliability

System effectiveness is more than just reliability; it also considers how well a system does the job it’s intended to do under given conditions.

System effectiveness can be calculated using this formula:

System Effectiveness = Operational Readiness × Mission Reliability × Design Adequacy

Operational readiness is the system’s availability when we need it. Mission reliability is the likelihood that the mission will be successfully completed. Design adequacy is the extent to which the system meets the specifications.
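Here is the formula as a short sketch. It treats each factor as a probability between 0 and 1, and the function name and sample values are hypothetical.

```python
def system_effectiveness(operational_readiness: float,
                         mission_reliability: float,
                         design_adequacy: float) -> float:
    """Product of the three factors, each expressed as a value from 0 to 1."""
    factors = (operational_readiness, mission_reliability, design_adequacy)
    if any(not 0.0 <= f <= 1.0 for f in factors):
        raise ValueError("each factor must be between 0 and 1")
    return operational_readiness * mission_reliability * design_adequacy


# Hypothetical system: 95% ready, 90% mission reliability, 85% design adequacy.
baseline = system_effectiveness(0.95, 0.90, 0.85)
improved = system_effectiveness(0.95, 0.95, 0.85)  # mission reliability raised to 95%
print(round(baseline, 4), round(improved, 4))
```

Because the factors multiply, the overall figure is always at or below the weakest factor, so a shortfall in any one of them caps the whole system.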

And each of these factors is directly influenced by reliability analysis. So when we improve reliability, we improve system effectiveness.

Strategies to improve system effectiveness through reliability analysis include:

- Identifying and solving weak points in the system

- Optimizing maintenance schedules

- Increasing fault tolerance

- Raising the quality of components

In my own modeling work, I’ve seen that even small increases in reliability can yield a large increase in system effectiveness.

Human Reliability Analysis

Human error is a major contributor to system failures. Thus, understanding human error and how to prevent it is critical.

Common types of human errors include:

- Omission: Failing to do a required action

- Commission: Doing an action incorrectly

- Intentional: Deliberate violation of procedures

Human Reliability Analysis (HRA) methods have evolved, and today we can classify them as first-, second-, and third-generation methods.

First-generation methods examine the sequence of actions people take, and often use task analysis to identify points in the procedure where something can go wrong.

Second-generation methods instead focus on the cognitive processes a person goes through. They consider factors such as the person’s workload and situational awareness.

Third-generation methods examine dependencies among human factors. Rather than just documenting corrective actions to take, the main goal is to understand how mistakes in one part of a system often propagate to cause problems elsewhere.

From my experience, combining HRA with a quantitative analysis of technical system reliability yields a much more accurate understanding of how the system will perform and a higher degree of robustness.

Conclusion

Reliability analysis is one of the most powerful process and systems improvement tools. I’ve seen it make a big difference in almost every industry, from manufacturing to software engineering. If you grasp MTBF and similar concepts and use test-retest reliability and other methods, you will improve your operations. Just keep in mind that reliability isn’t purely about numbers. It’s about instilling confidence in your systems and products. The takeaways from reliability analysis will help you optimize throughput, eliminate headaches, and achieve continuous improvement (CI) success.