I’ve been in the condition monitoring industry for decades. When it comes to predictive maintenance, accuracy and precision are paramount. However, accuracy and precision are not the same thing, despite the fact that many people use the terms interchangeably. Accuracy refers to getting close to the bullseye, while precision refers to getting the same answer again and again.

In this post, I’ll discuss the differences and why they’re important to your equipment’s health and lifespan. You’ll also discover how to make better sense of measurement data.

Defining Accuracy and Precision

Accuracy refers to how close your results are to the true value or a known value. In other words, it refers to how correct your results are. In my experience working with condition monitoring systems, accuracy is essential. If our sensors aren’t accurate, we may fail to catch critical equipment failures.

On the other hand, precision refers to how close your results are to each other. In simpler terms, it refers to how consistent your results are. You can have high precision without any accuracy. Just think about throwing darts at a dartboard. You might be hitting the same spot over and over again, but that spot is not the bullseye. That means you have precision without accuracy.

The relationship between accuracy and precision is nuanced. They are not opposites, but they aren’t the same thing either. They are related and feed off of each other, yet they accomplish different things. Ultimately, you need both of them to obtain reliable measurements.

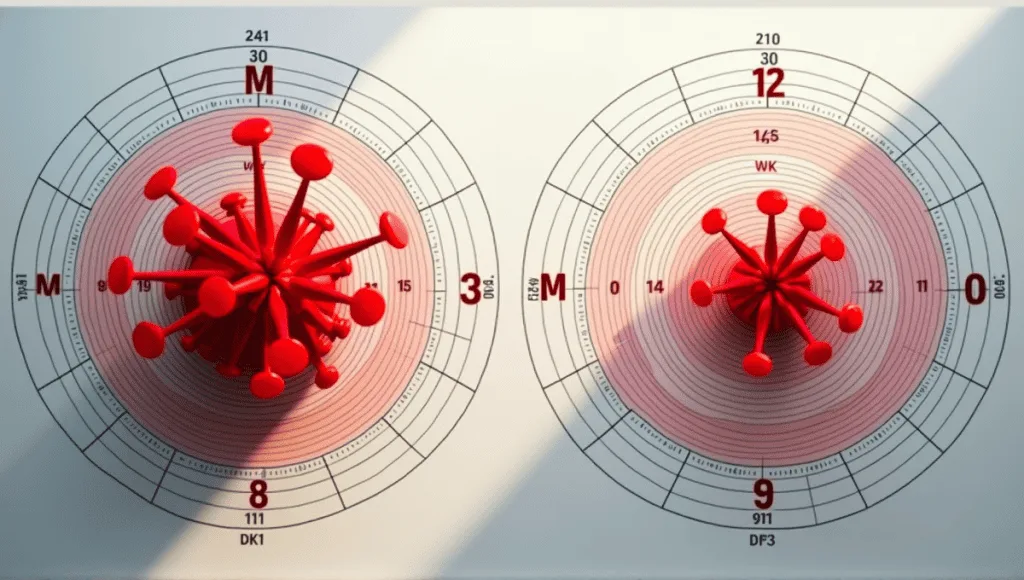

Here’s a useful visual using a target:

- Center of the target: True value

- Cluster of hits: High precision

- Hits near the center: High accuracy

- Spread of hits: Low precision

- Hits away from the center: Low accuracy
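The target picture can also be put into numbers. Here is a minimal sketch, assuming a known reference value and two made-up sets of repeated readings (the names `summarize`, `TRUE_VALUE`, and the data are purely illustrative). It treats the offset of the average from the reference as a stand-in for accuracy and the standard deviation as a stand-in for precision.

```python
import statistics

def summarize(readings, true_value):
    """Return (bias, spread) for a list of repeated readings."""
    # Bias: how far the average reading sits from the true value (accuracy).
    bias = statistics.mean(readings) - true_value
    # Spread: sample standard deviation of the readings (precision).
    spread = statistics.stdev(readings)
    return bias, spread

TRUE_VALUE = 10.0  # hypothetical reference value

# Tight cluster, but away from the bullseye.
precise_not_accurate = [12.0, 12.1, 11.9, 12.0, 12.0]
# Centered on the bullseye, but scattered.
accurate_not_precise = [8.0, 12.0, 9.0, 11.0, 10.0]

for name, readings in [
    ("precise, not accurate", precise_not_accurate),
    ("accurate, not precise", accurate_not_precise),
]:
    bias, spread = summarize(readings, TRUE_VALUE)
    print(f"{name}: bias={bias:+.2f}, spread={spread:.2f}")
```

The first set has a small spread but a large bias, while the second has almost no bias but a large spread.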

Understanding these two concepts is critical for anyone working with data or measurements. You’ll come across various scenarios where one of these concepts may be more important than the other, depending on what you are trying to achieve.

Historical Context and Evolution of Terms

The concepts of accuracy and precision have been around as scientific terms for centuries, but their definitions have changed over time. Early scientific measurements were limited by the available technology, so achieving precision was difficult. Accordingly, the distinction between accuracy and precision wasn’t as important.

However, as technology improved and we could measure more finely, the concepts of accuracy and precision became more distinct from one another. The publication of the ISO 5725 series in 1994 was a turning point in defining accuracy and precision as we know them today.

Until then, each industry and scientific discipline had used slightly different definitions, and ISO 5725 was the first document that drew them together. The ISO standards specified that accuracy covers both trueness (closeness to the true value) and precision (closeness of repeated results to each other).

This broader definition was a change from historical interpretations, but it allowed us to be more precise in our definitions of each term. Today, the scientific community largely agrees on these definitions. However, in casual conversation, people still often say “accuracy” when they mean “precision” and vice versa.

In my consulting work, I’ve seen the impact of this evolution on industrial practices. Companies have had to adjust how they determine whether a process is on target, and their quality control processes more broadly, to match these stricter definitions of accuracy and precision. It’s more than a simple language change: the definitions of accuracy and precision affect how we interpret data and make decisions.

Measuring Accuracy and Precision

For a binary classification model, such as one that predicts whether a machine will fail, accuracy has a simple formula:

Accuracy = (TP + TN) / (TP + TN + FP + FN)

Where:

- TP = True Positives

- TN = True Negatives

- FP = False Positives

- FN = False Negatives

This formula gives you a fraction between 0 and 1, usually quoted as a percentage, representing how often your model is correct. This is helpful, but it doesn’t tell you what types of errors your model is making.
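To see why the accuracy figure alone can mislead, here is a small sketch of the formula in code. The counts are invented for illustration: a hypothetical model that never raises an alarm, scored over 1,000 machine-days with 10 real failures.

```python
def accuracy(tp, tn, fp, fn):
    """Accuracy = (TP + TN) / (TP + TN + FP + FN)."""
    total = tp + tn + fp + fn
    if total == 0:
        raise ValueError("no predictions to score")
    return (tp + tn) / total

# Hypothetical "always healthy" model: it misses every one of the 10 failures.
always_healthy = accuracy(tp=0, tn=990, fp=0, fn=10)
print(f"{always_healthy:.1%}")  # 99.0%
```

The score looks excellent even though every failure was missed, which is exactly the kind of error the single number hides.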

Precision is often calculated using standard deviation. In engineering, we often quote precision as three times the standard deviation. Assuming the measurement errors are roughly normally distributed, about 99.7% of our measurements fall within three standard deviations of the mean.
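As a rough sketch of the three-sigma idea, the snippet below computes the band for a handful of made-up repeated readings. The data and variable names are assumptions for illustration, and the 99.7% figure only holds if the errors are close to normally distributed.

```python
import statistics

# Hypothetical repeated readings of the same quantity (e.g. mm/s).
readings = [4.98, 5.02, 5.01, 4.99, 5.00, 5.03, 4.97, 5.00]

mean = statistics.mean(readings)
sigma = statistics.stdev(readings)  # sample standard deviation

# Precision quoted as three standard deviations.
precision_3sigma = 3 * sigma
low, high = mean - precision_3sigma, mean + precision_3sigma

print(f"mean={mean:.3f}, 3-sigma={precision_3sigma:.3f}")
print(f"expected range: {low:.3f} to {high:.3f}")
```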

Other applicable metrics are:

- Mean Absolute Error (MAE)

- Root Mean Squared Error (RMSE)

- F1 Score

- Area Under Receiver Operating Characteristic Curve (AUC ROC)

You’ll find these metrics in many different fields. In my case, working in predictive maintenance, we generally use RMSE to evaluate how our models are performing. The benefit of the RMSE is that it penalizes larger errors more, and this is important when you are predicting equipment failure events. For further reading on analyzing predictive models, check out root cause analysis.
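Here is a small sketch of how RMSE penalizes larger errors compared with MAE. The numbers are invented: two hypothetical sets of predictions with the same total error, one spread evenly and one concentrated in a single big miss.

```python
import math

def mae(actual, predicted):
    """Mean Absolute Error."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

def rmse(actual, predicted):
    """Root Mean Squared Error."""
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(actual))

actual = [100, 100, 100, 100]       # hypothetical true values
even_errors = [90, 110, 90, 110]    # every prediction is off by 10
one_big_miss = [100, 100, 100, 60]  # three perfect, one off by 40

print(mae(actual, even_errors), rmse(actual, even_errors))
print(mae(actual, one_big_miss), rmse(actual, one_big_miss))
```

Both sets have the same MAE, but the RMSE doubles for the set with the single large miss.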

Keep in mind that the best metric to use will depend on the specific problem you are solving. There is no one size fits all solution.

Factors Affecting Accuracy and Precision

When discussing accuracy and precision, there are various factors at play that can impact the measurements. Let’s break it down.

Resolution is one of the most important factors. It refers to the smallest change in the measured quantity that the instrument can detect. A higher resolution allows you to detect smaller variations and can therefore improve accuracy and precision. In a previous job, I worked with sensors that could detect changes in machinery vibration at the micron level. This high resolution was critical for detecting machine faults early.

Another important concept to understand is the margin of error. This is typically defined as half of the value of the last significant place in your measurement. For example, if you measure 10.5 cm with a ruler that is marked in millimeters, your margin of error is 0.05 cm.
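The half-of-the-last-place rule is easy to express in code. This is a minimal sketch, assuming the reading is passed as text so that trailing zeros are preserved; `margin_of_error` is a hypothetical helper name, not a standard function.

```python
from decimal import Decimal

def margin_of_error(reading: str) -> Decimal:
    """Half of the last significant place of a reading given as text."""
    # Exponent of the last digit, e.g. -1 for "10.5" (tenths).
    exponent = Decimal(reading).as_tuple().exponent
    # Half of 10**exponent is 5 * 10**(exponent - 1).
    return Decimal(5).scaleb(exponent - 1)

print(margin_of_error("10.5"))   # reading in cm, marked to 0.1 cm
print(margin_of_error("10.50"))  # the same value read to 0.01 cm
```

Passing the reading as a string matters here: as a float, 10.5 and 10.50 are indistinguishable, so the extra significant place would be lost.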

There are also environmental factors that can impact measurements, including:

- Temperature

- Humidity

- Electromagnetic interference

- Vibration

There are also human factors that play a role in measurements:

- Operator’s skill

- Operator fatigue

- Operator bias

- Operator’s level of training

I’ve seen many examples in my career where these factors have impacted measurements. For example, one client of mine was experiencing erratic readings on their vibration monitoring system. After extensive troubleshooting, we discovered that the readings were being impacted by electromagnetic interference from a neighboring piece of equipment. This is a classic example of an environmental factor impacting precision.

Repeatability and Reproducibility

Repeatability refers to the variation in measurements from a single person or instrument under the same conditions. It’s about obtaining consistent measurements when you repeat the measurement. We commonly evaluate repeatability in our work by asking the same operator to measure the same piece of equipment multiple times.

Reproducibility, on the other hand, refers to the variation in measurements from different people, instruments, or over longer periods of time. It’s about obtaining consistent measurements when the conditions change. This is important if you’re conducting scientific research and want other laboratories to be able to reproduce your results.

These concepts help us think about precision. If you have high repeatability and reproducibility, you have high precision. This is particularly important in scientific research and quality control.
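As a rough illustration, repeatability and reproducibility can be estimated from a small study where several operators measure the same part. The sketch below uses invented data and a deliberately simplified calculation (pooled within-operator spread versus the spread of operator averages). A formal gauge study would use a proper variance analysis, so treat this as a teaching aid only.

```python
import math
import statistics

# Hypothetical study: three operators each measure the same part four times (mm).
measurements = {
    "operator_a": [25.01, 25.02, 25.00, 25.01],
    "operator_b": [25.05, 25.06, 25.04, 25.05],
    "operator_c": [24.98, 24.99, 24.97, 24.98],
}

# Repeatability: pooled within-operator standard deviation
# (a simple average of variances, valid here because group sizes are equal).
within_variances = [statistics.variance(v) for v in measurements.values()]
repeatability_sd = math.sqrt(statistics.mean(within_variances))

# Reproducibility (simplified): spread of the operator averages.
operator_means = [statistics.mean(v) for v in measurements.values()]
reproducibility_sd = statistics.stdev(operator_means)

print(f"repeatability  ~ {repeatability_sd:.4f} mm")
print(f"reproducibility ~ {reproducibility_sd:.4f} mm")
```

In this made-up data, each operator is very consistent, but the operators disagree with each other, so reproducibility is the weaker link.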

Real world examples:

- Manufacturing: Ensuring products consistently meet specifications

- Medical research: Ensuring your experimental results are consistent when other labs run the experiment

- Environmental monitoring: Comparing pollution measurements across different stations

Whenever I do consulting work, I emphasize the importance of both repeatability and reproducibility. It’s the foundation of building a reliable condition monitoring system.

Improving Accuracy and Precision

Improving accuracy and precision is a continuous effort in any measurement-intensive industry. Here are the main strategies I’ve employed in various measurement industries.

Calibration is everything. It ensures your instruments are measuring accurately. The frequency of calibration varies depending on your equipment and the application, but you should always calibrate regularly.
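One simple form of calibration is a two-point linear correction: read the instrument against two known references, then derive a gain and offset. The sketch below assumes the sensor's error is linear, which is not true of every instrument, and the function name and reference values are hypothetical.

```python
def two_point_calibration(raw_low, raw_high, ref_low, ref_high):
    """Build a correction function from two reference readings."""
    if raw_high == raw_low:
        raise ValueError("the two raw readings must differ")
    gain = (ref_high - ref_low) / (raw_high - raw_low)
    offset = ref_low - gain * raw_low
    # Return a function that maps a raw reading to a corrected value.
    return lambda raw: gain * raw + offset

# Hypothetical sensor: reads 0.5 at a true 0.0 and 10.3 at a true 10.0.
correct = two_point_calibration(raw_low=0.5, raw_high=10.3,
                                ref_low=0.0, ref_high=10.0)

print(f"{correct(5.4):.2f}")  # a raw mid-range reading after correction
```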

Common error reduction strategies include:

- Multiple measurements and averaging

- Controlling the environment

- Establishing comprehensive operator training

- Statistical process control
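The first strategy in the list, taking multiple measurements and averaging, can be illustrated with a short simulation. This is a sketch under assumptions: the sensor noise is random, unbiased, and roughly Gaussian, and all constants are invented. Averaging tightens precision, but it would not remove a systematic offset.

```python
import random
import statistics

random.seed(42)  # fixed seed so the sketch is repeatable

TRUE_VALUE = 50.0  # hypothetical true value
NOISE_SD = 2.0     # hypothetical sensor noise (standard deviation)
N_AVG = 16         # readings averaged per reported value
TRIALS = 2000

def read_sensor():
    """Simulate one noisy, unbiased reading."""
    return random.gauss(TRUE_VALUE, NOISE_SD)

single = [read_sensor() for _ in range(TRIALS)]
averaged = [
    statistics.mean([read_sensor() for _ in range(N_AVG)])
    for _ in range(TRIALS)
]

print(f"spread of single readings:  {statistics.stdev(single):.2f}")
print(f"spread of 16-reading means: {statistics.stdev(averaged):.2f}")
```

In theory, the spread of an average of n independent readings shrinks by a factor of the square root of n, so averaging 16 readings should cut the noise to roughly a quarter.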

Technology has evolved significantly to help us make more accurate and precise measurements. The key advancements are:

- Sensors with higher resolution

- Better algorithms for processing data

- Automated systems for calibrating equipment

- Machine learning to detect errors

Best practices differ by industry, but some apply across the board:

- Document all procedures in detail

- Use the right statistical techniques

- Regularly assess and validate measurement systems

- Invest in operator training

In my experience, the key to better accuracy is being diligent. You can’t set up a system and ignore it. You need to constantly monitor and adjust it.

Applications in Data Science and Machine Learning

Accuracy and precision also have specific meanings in data science and machine learning. For example, in convolutional neural networks, we use Top-1 and Top-5 accuracy. Top-1 accuracy evaluates whether the model’s top prediction is the correct label. Top-5 accuracy checks if the correct label is in the model’s top five predictions. These are common accuracy metrics in image classification tasks.
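Here is a minimal sketch of top-k accuracy in plain Python. To keep it short, the made-up example uses three classes and compares top-1 with top-2 rather than top-5, but the logic is the same. The function name and scores are illustrative, not taken from any particular framework.

```python
def top_k_accuracy(scores, labels, k):
    """Fraction of samples whose true label is among the k highest scores."""
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices sorted from highest score to lowest, truncated to k.
        ranked = sorted(range(len(row)), key=lambda i: row[i], reverse=True)
        hits += label in ranked[:k]
    return hits / len(labels)

# Hypothetical model scores for four images over three classes.
scores = [
    [0.7, 0.2, 0.1],  # true class 0: correct at top-1
    [0.3, 0.5, 0.2],  # true class 0: only correct at top-2
    [0.1, 0.3, 0.6],  # true class 0: wrong even at top-2
    [0.2, 0.1, 0.7],  # true class 2: correct at top-1
]
labels = [0, 0, 0, 2]

print(top_k_accuracy(scores, labels, k=1))  # 0.5
print(top_k_accuracy(scores, labels, k=2))  # 0.75
```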

Another example is precision in information retrieval. It measures the fraction of retrieved documents that are actually relevant. Precision is an important metric in search algorithms and recommendation systems. If a search algorithm returns a lot of irrelevant results, it has low precision.

Another related metric is recall, which measures the fraction of relevant documents in the document set that the algorithm successfully retrieves. There’s often a tradeoff between precision and recall.
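Both retrieval metrics reduce to simple set arithmetic. The sketch below uses invented document IDs, and `precision_recall` is a hypothetical helper.

```python
def precision_recall(retrieved, relevant):
    """Return (precision, recall) for a set of retrieved documents."""
    retrieved, relevant = set(retrieved), set(relevant)
    hits = retrieved & relevant  # relevant documents that were retrieved
    precision = len(hits) / len(retrieved) if retrieved else 0.0
    recall = len(hits) / len(relevant) if relevant else 0.0
    return precision, recall

# Hypothetical search: 4 results returned, 5 relevant documents exist.
retrieved = ["doc1", "doc2", "doc3", "doc4"]
relevant = ["doc1", "doc3", "doc5", "doc6", "doc7"]

precision, recall = precision_recall(retrieved, relevant)
print(precision, recall)  # 0.5 0.4
```

Two of the four results are relevant (precision 0.5), and two of the five relevant documents were found (recall 0.4).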

Finding the right tradeoff between precision and recall in AI models is a challenging problem. It often requires tuning hyperparameters, figuring out the right model architecture, and working through other intricacies of the model itself. In my consulting work with clients on predictive maintenance algorithms, a common problem is weighing the cost to the business of responding to a false positive against the harm caused by missing a failure.

It’s important to understand these concepts as a data scientist. For example, a very precise spam filter rarely flags a legitimate email as spam, but at the cost of letting some spam through. Alternatively, a high-recall system might find all of the relevant documents, but it also includes a lot of irrelevant documents. The ideal balance depends on your specific use case.
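To see the tradeoff in numbers, here is a small sketch that moves the decision threshold of a hypothetical spam scorer. The scores and labels are invented, and the convention of reporting precision as 1.0 when nothing is flagged is an assumption made for this example.

```python
def precision_recall_at(threshold, scores, is_spam):
    """Precision and recall when emails scoring >= threshold are flagged."""
    flagged = [s >= threshold for s in scores]
    tp = sum(f and y for f, y in zip(flagged, is_spam))
    fp = sum(f and not y for f, y in zip(flagged, is_spam))
    fn = sum((not f) and y for f, y in zip(flagged, is_spam))
    # Assumed convention: no flags means no false alarms, so precision is 1.0.
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# Hypothetical spam scores and ground truth for eight emails.
scores = [0.95, 0.90, 0.80, 0.60, 0.55, 0.40, 0.30, 0.10]
is_spam = [True, True, False, True, False, True, False, False]

for threshold in (0.85, 0.35):
    p, r = precision_recall_at(threshold, scores, is_spam)
    print(f"threshold={threshold}: precision={p:.2f}, recall={r:.2f}")
```

The strict threshold flags only spam but misses half of it, while the loose threshold catches all the spam and sweeps up two legitimate emails along the way.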

Real-world Implications and Case Studies

Scientific research is one of the most obvious applications. For example, accurate and precise instruments are essential for measuring climate change. Otherwise, inaccurate data could lead to incorrect conclusions about the direction in which global temperatures are trending.

Industrial quality control is another obvious example. If you’re manufacturing a car, the parts need to be manufactured with a high degree of precision. Even a single seemingly tiny part being slightly off can impact the safety and performance of the vehicle.

Medical diagnostics is perhaps one of the most critical applications. A medical lab test must be accurate and precise; otherwise, the doctors will make incorrect diagnoses and treatment decisions. Similarly, a blood glucose meter needs to be accurate and precise; otherwise, the patient with diabetes can’t trust the results.

Accuracy is often more important than precision in financial forecasting. For example, an analyst might choose a model that isn’t the most precise but is the most accurate. If an analyst’s stock market model isn’t accurate, they’re out of a job, even if it’s a very precise model.

Lessons from these case studies:

- Calibration and validation are key.

- Understanding measurement limitations is crucial.

- Combining multiple measurement techniques can increase accuracy and precision.

- Data interpretation skills are important.

In my own career, I’ve seen these principles in action time and time again. Whether you’re analyzing data from scientific instruments or analyzing financial data, understanding accuracy and precision is key to making quality decisions.

In Closing

Accuracy and precision are the foundation of any trustworthy measurement or data analysis. I’ve seen the significance of accuracy and precision at play in everything from industrial processes to scientific research. Congratulations! You now know the definitions, historical background, and real-world applications of accuracy and precision.

Keep in mind that accurate data isn’t necessarily precise, and vice versa. Aim for both in your work. Use these concepts to improve your measurements, experiments, and data analysis. Your ability to identify and improve accuracy and precision will result in more reliable data and better decision making.